This assumes you are familiar with the basic configuration needed to connect to Amazon Web Services (AWS) using Python boto3. If not, check out my article Python (Scripting) - Getting Started with Amazon Web Services (AWS) boto3.

Here is the minimal boilerplate code without any error handling to upload a file to one of your S3 Buckets. This will upload /tmp/foo.txt on your local system to the root directory in your S3 Bucket.

#!/usr/bin/python3

import boto3

import os

client = boto3.client('s3')

client.upload_file("/tmp/foo.txt", "my-bucket-abc123", "foo.txt")

This will upload /tmp/foo.txt on your local system to the bar directory in your S3 Bucket.

#!/usr/bin/python3

import boto3

import os

client = boto3.client('s3')

client.upload_file("/tmp/foo.txt", "my-bucket-abc123", "bar/foo.txt")
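S3 does not really have directories. The "bar directory" is just a prefix on the object key, which is why "bar/foo.txt" works without creating anything first. Here is a small sketch of building that key from a local path and a prefix. The function name s3_key_for is my own invention for illustration and is not part of boto3.

```python
import posixpath


def s3_key_for(local_path, prefix=""):
    """Build an S3 object key from a local file path and an optional prefix.

    s3_key_for is a hypothetical helper, not part of boto3.
    """
    filename = posixpath.basename(local_path)
    # Strip stray slashes so "bar", "bar/" and "/bar/" all behave the same.
    prefix = prefix.strip("/")
    return f"{prefix}/{filename}" if prefix else filename


print(s3_key_for("/tmp/foo.txt"))         # foo.txt
print(s3_key_for("/tmp/foo.txt", "bar"))  # bar/foo.txt
```

The returned string can be passed as the third argument to client.upload_file.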

Here is a more practical example, with try/except/else error handling.

#!/usr/bin/python3

import boto3

import sys

full_path_to_local_file = "/tmp/foo.txt"

bucket_dest = "foo.txt"

try:

    client = boto3.client('s3')

except Exception as exception:

    print(exception)

    sys.exit(1)

try:

    client.upload_file(full_path_to_local_file, "my-bucket-abc123", bucket_dest)

except Exception as exception:

    print(exception)

else:

    print(f"successfully uploaded {full_path_to_local_file} to {bucket_dest} in my-bucket-abc123")
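If you upload from more than one place in a script, it can help to wrap the try/except/else in a function that returns True or False. This is only a sketch. The name upload_one is hypothetical, and client is assumed to be the object returned by boto3.client('s3'), passed in by the caller so the function itself has no AWS setup in it.

```python
import os


def upload_one(client, local_path, bucket, key):
    """Upload one file and report the outcome; returns True on success.

    client is assumed to be a boto3 S3 client, e.g. boto3.client('s3').
    upload_one is a hypothetical helper name.
    """
    # Catch the most common mistake before making any network call.
    if not os.path.isfile(local_path):
        print(f"{local_path} does not exist or is not a regular file")
        return False
    try:
        client.upload_file(local_path, bucket, key)
    except Exception as exception:
        print(f"failed to upload {local_path} to {key} in {bucket} - {exception}")
        return False
    print(f"successfully uploaded {local_path} to {key} in {bucket}")
    return True
```

A call would look like upload_one(client, "/tmp/foo.txt", "my-bucket-abc123", "foo.txt"), and the return value can drive the exit code of the script.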

And here is an example using os.walk to upload all of the files at and below a directory.

#!/usr/bin/python3

import boto3

import os

import re

import sys

bucket = "my-bucket-abcdefg"

try:

    client = boto3.client('s3')

except Exception as exception:

    print(exception)

    sys.exit(1)

for root, dirs, files in os.walk("/tmp"):

    for filename in files:

        full_path_to_local_file = os.path.join(root, filename)

        # replace only the leading /tmp so that /tmp/foo.txt becomes backups/foo.txt

        bucket_dest = re.sub(r'^/tmp', 'backups', full_path_to_local_file)

        try:

            client.upload_file(full_path_to_local_file, bucket, bucket_dest)

        except Exception as exception:

            print(f"got the following exception when attempting to upload {full_path_to_local_file} to {bucket_dest} in {bucket} - {exception}")

        else:

            print(f"successfully uploaded {full_path_to_local_file} to {bucket_dest} in {bucket}")
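It can be handy to work out which local file maps to which S3 key before uploading anything, so you can print or review the list first. Here is a sketch of that using os.walk and os.path.relpath instead of a regular expression. The function name plan_uploads is hypothetical.

```python
import os


def plan_uploads(local_root, prefix):
    """Yield (local_path, s3_key) pairs for every file at and below local_root.

    plan_uploads is a hypothetical helper; it only builds names and uploads nothing.
    """
    for root, _dirs, files in os.walk(local_root):
        for filename in files:
            local_path = os.path.join(root, filename)
            # Path relative to local_root, e.g. "sub/b.txt".
            relative = os.path.relpath(local_path, local_root)
            # S3 keys use forward slashes, whatever separator the local OS uses.
            parts = [p for p in [prefix.strip("/")] if p] + relative.split(os.sep)
            yield local_path, "/".join(parts)
```

Each pair can then be handed to client.upload_file(local_path, bucket, key) inside the same try/except/else shown above.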

If you want to upload the file using your own KMS Customer Managed Key (CMK), include ExtraArgs={"ServerSideEncryption": "aws:kms", "SSEKMSKeyId": "your key id"}.

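At a high level, there are 3 types of server-side encryption, which differ in who manages the key.

- Server Side Encryption Amazon Managed (sse-amz), which the AWS documentation calls SSE-S3

- Server Side Encryption Key Management Service (sse-kms)

- Server Side Encryption Customer (sse-c), where you supply the key yourself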
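As a rough sketch, here is how the three types could map to the ExtraArgs dictionary passed to upload_file. The function name extra_args_for and the mode strings are my own labels for illustration. The dictionary keys are real upload_file arguments, but the sse-c entry is an assumption on my part: it passes only the customer key arguments and relies on boto3 to encode the key and add the MD5 when SSECustomerKeyMD5 is left out.

```python
def extra_args_for(mode, kms_key_id=None, customer_key=None):
    """Return an ExtraArgs dict for upload_file for one encryption type.

    extra_args_for and the mode labels are hypothetical, for illustration only.
    """
    if mode == "sse-amz":
        # Amazon managed key
        return {"ServerSideEncryption": "AES256"}
    if mode == "sse-kms":
        if not kms_key_id:
            raise ValueError("sse-kms needs a KMS key id")
        return {"ServerSideEncryption": "aws:kms", "SSEKMSKeyId": kms_key_id}
    if mode == "sse-c":
        if customer_key is None:
            raise ValueError("sse-c needs a customer key")
        # Assumption: with no SSECustomerKeyMD5 given, boto3 handles the
        # base64 encoding and MD5 of the key for you.
        return {"SSECustomerAlgorithm": "AES256", "SSECustomerKey": customer_key}
    raise ValueError(f"unknown encryption mode: {mode}")


print(extra_args_for("sse-kms", kms_key_id="your key id"))
```

The result would be used as client.upload_file(local_file, bucket, key, ExtraArgs=extra_args_for(...)).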

Here is how you can upload a file using your own KMS key with Server Side Encryption Key Management Service (sse-kms).

#!/usr/bin/python3

import boto3

import os

client = boto3.client('s3')

client.upload_file(

    "/tmp/foo.txt",

    "my-bucket-abc123",

    "foo.txt",

    ExtraArgs={

        "ServerSideEncryption": "aws:kms",

        "SSEKMSKeyId": "4802df3b-1b8b-4f7b-af98-61bbf207468d"})

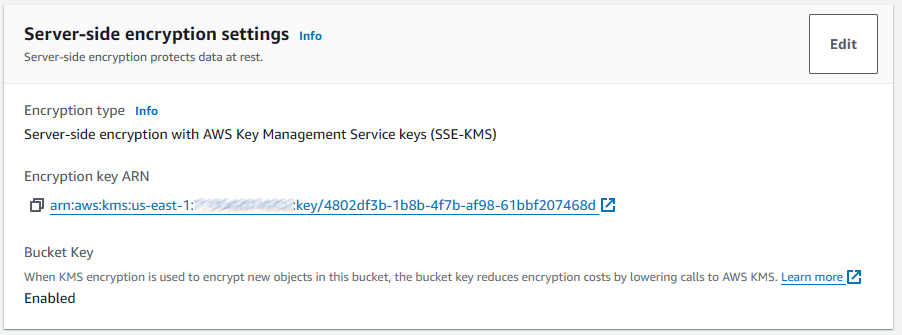

In this scenario, the object in the S3 bucket should show the KMS key you used.
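If you would rather check this from Python than from the console, one option is head_object, whose response includes ServerSideEncryption and SSEKMSKeyId fields when they apply. This is a sketch only. The name describe_encryption is hypothetical, client is assumed to be boto3.client('s3'), and as far as I know the key id comes back as the full key ARN rather than the short id.

```python
def describe_encryption(client, bucket, key):
    """Return the encryption fields S3 reports for one object.

    client is assumed to be a boto3 S3 client; describe_encryption is a
    hypothetical helper name.
    """
    response = client.head_object(Bucket=bucket, Key=key)
    # Either field is None when S3 did not report it for this object.
    return {
        "ServerSideEncryption": response.get("ServerSideEncryption"),
        "SSEKMSKeyId": response.get("SSEKMSKeyId"),
    }
```

For the upload above, describe_encryption(client, "my-bucket-abc123", "foo.txt") should report aws:kms along with the key that was used.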

And here is an example of how you can upload a file using Server Side Encryption Customer (sse-c) with a key that you provide.

client.upload_file(

    "/tmp/bar.txt",

    "my-bucket-abc123",

    "bar2.txt",

    ExtraArgs={

        "ServerSideEncryption": "AES256",

        "SSECustomerAlgorithm": "AES256",

        "SSECustomerKey": "4s6iQXekYL6BxzCZX8Zn3Kr4djK42BSLgb1nP3C7qp0=",

        "SSECustomerKeyMD5": "tAasKToBgkFA3Sy43tQjSA=="})

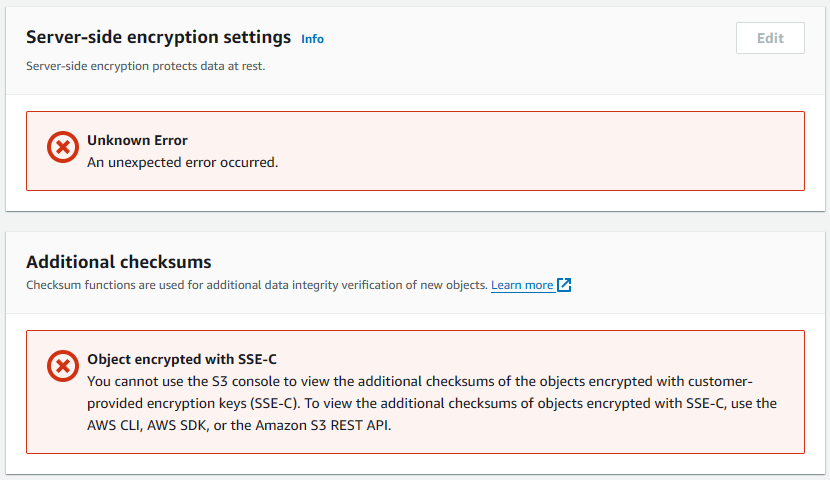
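The SSECustomerKey and SSECustomerKeyMD5 values in the example look like a base64 encoded 256-bit key and the base64 encoded MD5 digest of that key. Here is a sketch of how such a pair could be generated, assuming that is the format you want. The function name new_sse_c_key is hypothetical.

```python
import base64
import hashlib
import os


def new_sse_c_key():
    """Generate a random 256-bit key plus the base64 MD5 digest of that key.

    new_sse_c_key is a hypothetical helper name.
    """
    raw_key = os.urandom(32)  # AES256 needs a 32 byte key
    key_b64 = base64.b64encode(raw_key).decode("ascii")
    # The MD5 is taken over the raw key bytes, not over the base64 text.
    md5_b64 = base64.b64encode(hashlib.md5(raw_key).digest()).decode("ascii")
    return key_b64, md5_b64


key_b64, md5_b64 = new_sse_c_key()
print(len(key_b64), len(md5_b64))
```

Keep the key somewhere safe. S3 does not store it, so the same key has to be supplied again to download the object.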

Be aware that if you upload a file to an S3 Bucket with an sse-c key, you'll most likely see the following when viewing the object in the S3 console, which is probably OK since you manage your own sse-c keys.
