This assumes you have already configured the aws command line tool. If not, check out my article on Getting Started with the AWS CLI.

Kinesis is all about streaming. For example, Kinesis can capture logs in real time, so that if a system fails, the logs are retained in Kinesis rather than lost. Every now and then I've had a system, such as an EC2 instance, hang completely, and I had to restore the instance from a snapshot. In that scenario, the logs from the moment the instance started to hang were lost. Capturing logs in real time is one example of what Kinesis can be used for.

In this Getting Started article, we will:

- Create an on-demand Kinesis Data Stream

- Put Data in Kinesis using the AWS CLI

- Get Data in Kinesis using the AWS CLI

- Install and Set Up the Kinesis Java Agent

- Send Data to Kinesis using the Kinesis Java Agent (Producer)

- Store Kinesis Data in an S3 Bucket using Firehose (Consumer)

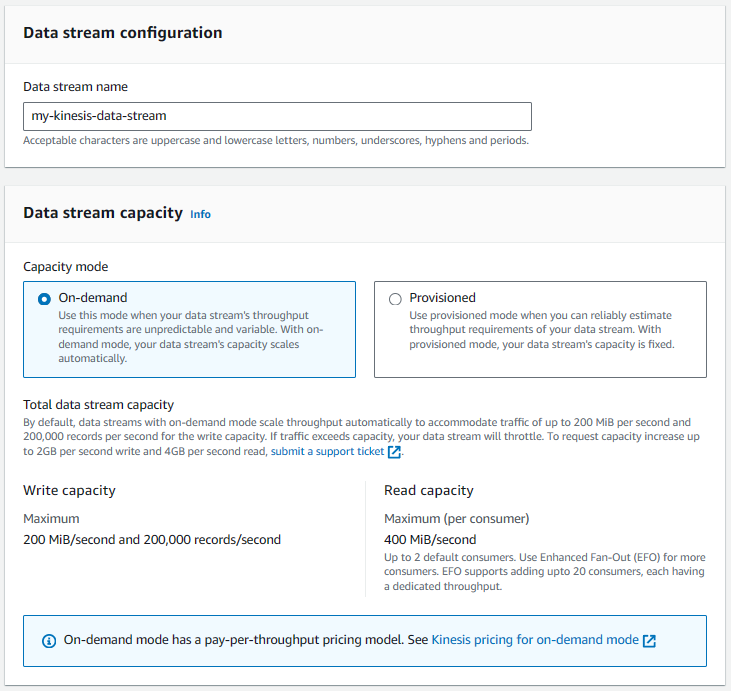

Create an on-demand Kinesis Data Stream

Since this is just a simple Getting Started article, let's create an on-demand data stream.

Alternatively, the stream can be created using the aws kinesis create-stream command.

aws kinesis create-stream --stream-name my-kinesis-data-stream --stream-mode-details '{"StreamMode":"ON_DEMAND"}'

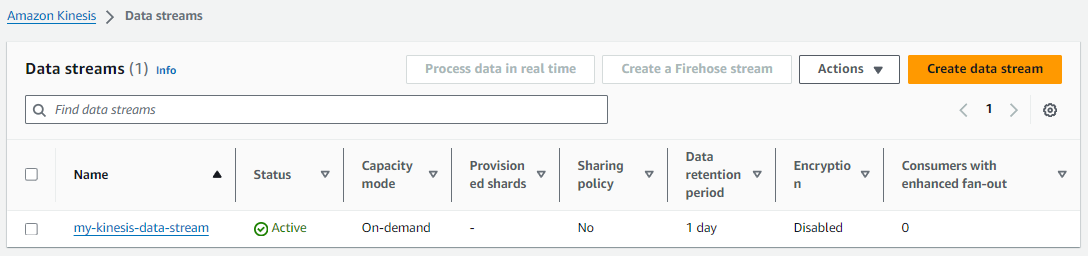

And in a few moments, your Kinesis data stream should be active.

Likewise, the aws kinesis list-streams command should return your stream.

~]$ aws kinesis list-streams

{
  "StreamNames": [
    "my-kinesis-data-stream"
  ],
  "StreamSummaries": [
    {
      "StreamName": "my-kinesis-data-stream",
      "StreamARN": "arn:aws:kinesis:us-east-1:123456789012:stream/my-kinesis-data-stream",
      "StreamStatus": "ACTIVE",
      "StreamModeDetails": {
        "StreamMode": "ON_DEMAND"
      },
      "StreamCreationTimestamp": "2024-05-15T01:13:06+00:00"
    }
  ]
}

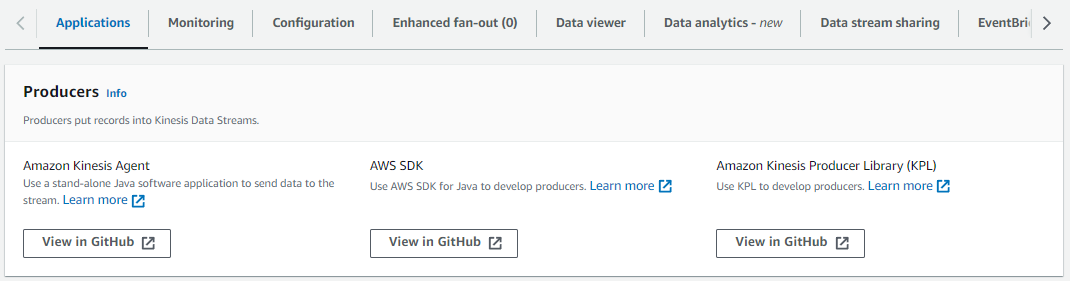
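If you are scripting the setup rather than watching the console, something like the following could block until the stream is ready. This is only a sketch: it assumes the `aws kinesis wait stream-exists` and `aws kinesis describe-stream-summary` subcommands are available in your version of the AWS CLI, and `wait_for_stream` is a helper name I made up for this example.

```shell
# Block until the named stream is ACTIVE, then print its status.
# wait_for_stream is a hypothetical helper name, not part of the AWS CLI.
wait_for_stream() {
  # Polls the stream until StreamStatus is ACTIVE (or gives up with an error).
  aws kinesis wait stream-exists --stream-name "$1" || return 1

  # Print just the status string instead of the full JSON document.
  aws kinesis describe-stream-summary \
    --stream-name "$1" \
    --query 'StreamDescriptionSummary.StreamStatus' \
    --output text
}
```

Calling `wait_for_stream my-kinesis-data-stream` should print ACTIVE once the stream is ready.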

Next, you'll configure an application to send data to Kinesis. Applications that send data are known as Producers. After you select your Kinesis Data Stream in the console, the Applications tab displays a few options for configuring a Producer application to send data to Kinesis.

Put Data in Kinesis using the AWS CLI

Now, before we get into a practical example, let's first use the AWS CLI to put data in Kinesis. Let's get the base64 encoding of Hello World.

~]$ echo "Hello World" | base64

SGVsbG8gV29ybGQK

Let's get 5 different base64 encoded strings.

~]$ echo a | base64

YQo=

~]$ echo b | base64

Ygo=

~]$ echo c | base64

Ywo=

~]$ echo d | base64

ZAo=

~]$ echo e | base64

ZQo=
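One detail worth knowing about the encoded strings above: echo appends a newline, so YQo= is the letter a followed by a newline, not just the letter a. That is harmless for this walkthrough, but if you would rather send payloads without the trailing newline, printf is one option. A small sketch to show the difference:

```shell
# echo appends a newline, so the encoded payload is "a" plus a newline.
echo a | base64               # YQo=

# printf '%s' writes the string as-is, so the payload is just "a".
printf '%s' a | base64        # YQ==

# Decoding shows the difference in byte counts.
printf '%s' 'YQo=' | base64 --decode | wc -c   # 2 bytes
printf '%s' 'YQ==' | base64 --decode | wc -c   # 1 byte
```

The rest of this article keeps using the echo form, so the records in Kinesis each end with a newline.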

Make note of the datetime before putting the records into Kinesis, and then use the aws kinesis put-record command to put the records into our Kinesis Data Stream.

~]$ date

Sat May 18 08:06:35 UTC 2024

~]$ aws kinesis put-record --stream-name my-kinesis-data-stream --partition-key 123 --data YQo=

{
  "ShardId": "shardId-000000000000",
  "SequenceNumber": "49652152523783921918411693302913525021099805039491284994"
}

~]$ aws kinesis put-record --stream-name my-kinesis-data-stream --partition-key 123 --data Ygo=

{
  "ShardId": "shardId-000000000000",
  "SequenceNumber": "49652152523783921918411693302914733946919420080982851586"
}

~]$ aws kinesis put-record --stream-name my-kinesis-data-stream --partition-key 123 --data Ywo=

{
  "ShardId": "shardId-000000000000",
  "SequenceNumber": "49652152523783921918411693302915942872739035259913371650"
}

~]$ aws kinesis put-record --stream-name my-kinesis-data-stream --partition-key 123 --data ZAo=

{
  "ShardId": "shardId-000000000000",
  "SequenceNumber": "49652152523783921918411693302917151798558650438843891714"
}

~]$ aws kinesis put-record --stream-name my-kinesis-data-stream --partition-key 123 --data ZQo=

{
  "ShardId": "shardId-000000000000",
  "SequenceNumber": "49652152523783921918411693302918360724378265480335458306"
}

~]$ date

Sat May 18 08:07:11 UTC 2024

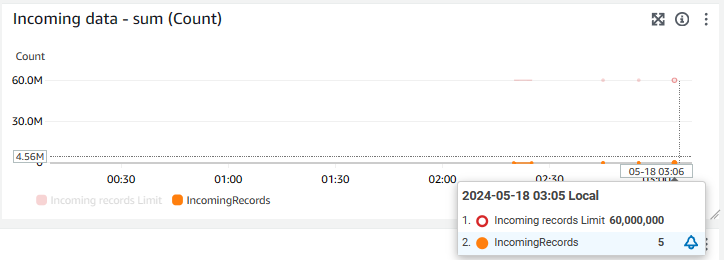

And in the AWS Kinesis console, incoming data - sum (count) should be 5. Looking good!
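Typing five nearly identical commands gets old fast, so the same puts could be wrapped in a loop. This is a sketch, not the only way to do it: `put_letters` is a hypothetical helper name, and it assumes your AWS CLI accepts base64 for --data just like the commands above (as far as I know that is the default in AWS CLI v2, and the cli_binary_format setting can change it).

```shell
# Put one record per argument onto a stream, all under the same partition key.
# put_letters is a hypothetical helper; the stream name is the first argument.
put_letters() {
  stream_name="$1"
  shift
  for letter in "$@"; do
    # Encode each payload the same way as above (echo adds a trailing newline).
    encoded=$(echo "$letter" | base64)
    aws kinesis put-record \
      --stream-name "$stream_name" \
      --partition-key 123 \
      --data "$encoded" || return 1
  done
}
```

For example, `put_letters my-kinesis-data-stream a b c d e` would issue the same five put-record calls shown above.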

Get Data in Kinesis using the AWS CLI

The aws kinesis get-shard-iterator command can be used to get the Shard Iterator.

~]$ aws kinesis get-shard-iterator --shard-id shardId-000000000000 --shard-iterator-type TRIM_HORIZON --stream-name my-kinesis-data-stream

{
  "ShardIterator": "AAAAAAAAAAEfW0Zybg2XGNf7C/j9I25NCC0egOxYd8RDXGqJMVJ0+zfxz2lmIfsWu+x+P0IZystt75j5cGBtVW1ZFzIJfEXUtEgStUJlv+0A/I1zQR/73yhSzLdaXRFFjsYIljI9jg6a14qJ3b6LMRvwarEvVPYVe7CiPOW6Z8QeihnQ1m/TxbHFiJK3g9eiuJSPNt5YoAJPnpb5nUYng9QcyJYG0Du4cJlMAx3RZMhqkw70zagOoItQ5Hb9v76zq9ns7ojlCb4="
}

Then the aws kinesis get-records command can be used with the Shard Iterator to get the data in the shard. Each call to get-shard-iterator returns a new value, and an iterator expires about 5 minutes after it is returned, so the value you pass will differ from the one shown here. Notice in this example that the shard includes the 5 records. Nice!

~]$ aws kinesis get-records --shard-iterator AAAAAAAAAAEmAWYxVHzUk+h7JE+iB6JIYJVj8TM7ofvcCJI5FiF8h0YIhTLQYt6AIMvKmE5GyU3gFxaEr0dTJfPvaO2ymyNWOKNCR6xHACar5gvGCZBfItRUr6PFjr/QPaUSRpzTof/9OTeiqGBhTHCBf1l8zUxnO02Q3mBRAu3RZuQNglzapuaHm7rWE6U+qTUiGUwoUTfaN8eNzdRKVCs/Wuv9WBx5kZsJXoOwH6mPP10Buc4kRaKgNCq0SYTFUas31VopWsY=

{
  "Records": [
    {
      "SequenceNumber": "49652152523783921918411693302913525021099805039491284994",
      "ApproximateArrivalTimestamp": "2024-05-18T08:06:43.571000+00:00",
      "Data": "YQo=",
      "PartitionKey": "123"
    },
    {
      "SequenceNumber": "49652152523783921918411693302914733946919420080982851586",
      "ApproximateArrivalTimestamp": "2024-05-18T08:06:49.189000+00:00",
      "Data": "Ygo=",
      "PartitionKey": "123"
    },
    {
      "SequenceNumber": "49652152523783921918411693302915942872739035259913371650",
      "ApproximateArrivalTimestamp": "2024-05-18T08:06:57.221000+00:00",
      "Data": "Ywo=",
      "PartitionKey": "123"
    },
    {
      "SequenceNumber": "49652152523783921918411693302917151798558650438843891714",
      "ApproximateArrivalTimestamp": "2024-05-18T08:07:04.053000+00:00",
      "Data": "ZAo=",
      "PartitionKey": "123"
    },
    {
      "SequenceNumber": "49652152523783921918411693302918360724378265480335458306",
      "ApproximateArrivalTimestamp": "2024-05-18T08:07:10.461000+00:00",
      "Data": "ZQo=",
      "PartitionKey": "123"
    }
  ],
  "NextShardIterator": "AAAAAAAAAAFppvgEIER5FI4JB0krnt5SwsT4Pqd1dVKrBlAvlT9tbccL1proIDY1JI1e/cwADHnCD8gndEctHyBeUWJgAxoTp0mYx6W1GSK+aTJ3EbGMpH3LSlBTP7ghCIcmyokZ7tYzLoGpoOCKAq1T96ptKoWNO3rCXM1/LSOGivjPEcPB5cUgtrOREmpVb/hubIL/UDmzaIyR/yHEXnlguIoCO9Nj9HEP73M3+1PME7qjL7XOFdEsMPB6yQOs49+SUUzONG0=",
  "MillisBehindLatest": 0
}
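Since a Shard Iterator is short-lived and awkward to copy and paste, the two commands could be chained, and the base64 Data fields decoded along the way. The following is a sketch under a few assumptions: `read_shard` is a hypothetical helper name, it relies on the CLI's global --query and --output text options, and it only reads a single batch of records (a real consumer would keep following NextShardIterator).

```shell
# Read one shard from its oldest record and print each decoded payload.
# read_shard is a hypothetical helper: $1 is the stream name, $2 the shard ID.
read_shard() {
  # --query with --output text strips the JSON wrapper, leaving only the iterator.
  iterator=$(aws kinesis get-shard-iterator \
    --stream-name "$1" \
    --shard-id "$2" \
    --shard-iterator-type TRIM_HORIZON \
    --query 'ShardIterator' --output text) || return 1

  # Records[].Data comes back as whitespace-separated base64 strings.
  for encoded in $(aws kinesis get-records \
    --shard-iterator "$iterator" \
    --query 'Records[].Data' --output text); do
    printf '%s' "$encoded" | base64 --decode
  done
}
```

With the five records from this article, `read_shard my-kinesis-data-stream shardId-000000000000` should print the letters a through e, one per line, because each payload was encoded with its trailing newline.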

Install and Set Up the Kinesis Java Agent

Now let's make this a bit more practical. Let's say you have mission-critical logs that MUST never be lost. The Amazon Kinesis Java Agent can be used to send the logs (or really, any file) to Kinesis. There are a few different ways to install and set up the Amazon Kinesis Java Agent.

- Install and set up Amazon Kinesis Java Agent from GitHub

- Install and set up Amazon Kinesis Java Agent using yum or dnf

- Install and set up Amazon Kinesis Java Agent using Docker

Send Data to Kinesis using the Kinesis Java Agent

Now let's send some data to Kinesis using the Kinesis Java Agent. During the install and setup of the Kinesis Java Agent, you should have configured agent.json with something like this. In this example, the Kinesis Java Agent will monitor files matching /tmp/app.log* and send the logs to my-kinesis-data-stream. There are much better options than keeping your AWS Access Key and Secret Key in agent.json, but again, I'm just trying to keep this simple to get Kinesis up and working.

~]$ cat /etc/aws-kinesis/agent.json

{
  "awsAccessKeyId": "ABK349fJFM499FMDFD23",
  "awsSecretAccessKey": "Ab587Cd234Ef98gG9834Aa345908bB23409234Cc",
  "flows": [
    {
      "filePattern": "/tmp/app.log*",
      "kinesisStream": "my-kinesis-data-stream"
    }
  ]
}
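As one example of a better option, the keys can be left out of agent.json entirely. My understanding is that the agent then falls back to other credential sources, such as an IAM role attached to the EC2 instance, but treat that as an assumption and check it against the agent documentation for your version. The sketch below writes such a file to a path of your choosing; `write_agent_config` is a hypothetical helper name.

```shell
# Write an agent.json with no embedded keys to the path given as $1.
# With awsAccessKeyId/awsSecretAccessKey omitted, the agent is expected to fall
# back to other credential sources, such as an IAM role attached to the instance.
write_agent_config() {
  cat > "$1" <<'EOF'
{
  "flows": [
    {
      "filePattern": "/tmp/app.log*",
      "kinesisStream": "my-kinesis-data-stream"
    }
  ]
}
EOF
}
```

For example, `write_agent_config /tmp/agent.json` produces a file you can review before copying it over /etc/aws-kinesis/agent.json and restarting the agent.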

Let's append Hello and World to /tmp/app.log.

~]$ echo Hello >> /tmp/app.log

~]$ echo World >> /tmp/app.log

/var/log/aws-kinesis-agent/aws-kinesis-agent.log should contain something like this, which shows that 2 records were successfully sent to the destination. Awesome sauce!

2024-05-17 01:28:09.124+0000 (FileTailer[kinesis:my-kinesis-data-stream:/tmp/app.log*].MetricsEmitter RUNNING) com.amazon.kinesis.streaming.agent.tailing.FileTailer [INFO] FileTailer[kinesis:my-kinesis-data-stream:/tmp/app.log*]: Tailer Progress: Tailer has parsed 2 records (12 bytes), transformed 0 records, skipped 0 records, and has successfully sent 2 records to destination.

2024-05-17 01:28:09.130+0000 (Agent.MetricsEmitter RUNNING) com.amazon.kinesis.streaming.agent.Agent [INFO] Agent: Progress: 2 records parsed (12 bytes), and 2 records sent successfully to destinations. Uptime: 90058ms
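The agent log gets noisy, so it can help to pull out only the latest agent-level progress line. A small sketch, assuming the log lines look like the ones above; `last_agent_progress` is a hypothetical helper name.

```shell
# Print the most recent agent-level progress line from the Kinesis Agent log.
# last_agent_progress is a hypothetical helper; $1 is the path to the log file.
last_agent_progress() {
  grep 'records sent successfully' "$1" | tail -n 1
}
```

For example, `last_agent_progress /var/log/aws-kinesis-agent/aws-kinesis-agent.log` prints the latest "records sent successfully to destinations" line.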

Store Kinesis Data in an S3 Bucket using Firehose (Consumer)

Now let's set up Firehose to get the records from the Kinesis Data Stream and store them in an S3 Bucket. There are many options for where the data could be stored. Again, since this is meant to be just a simple Getting Started article, I'm going with S3, since S3 Buckets are easy to set up and configure. Let's use the aws s3api create-bucket command to create an S3 Bucket.

aws s3api create-bucket --bucket my-bucket-abcdefg --region us-east-1

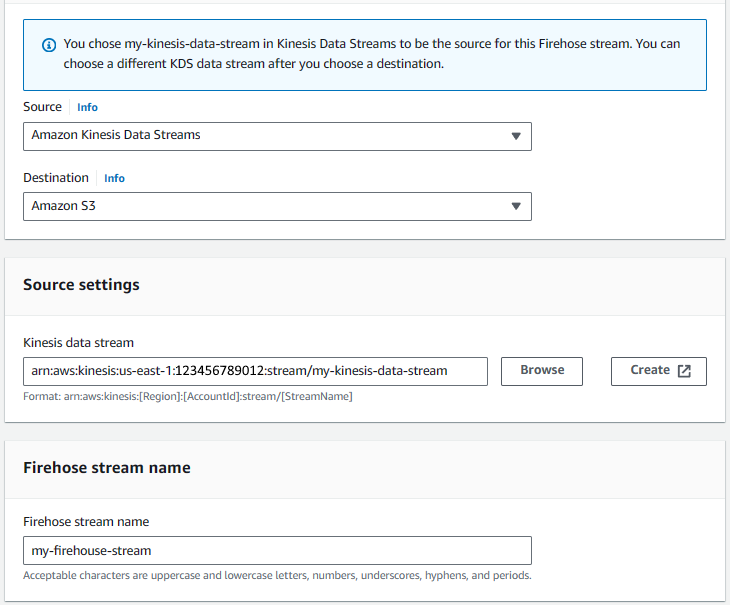

And then let's create a Firehose Stream that uses our Kinesis Data Stream and the S3 Bucket.

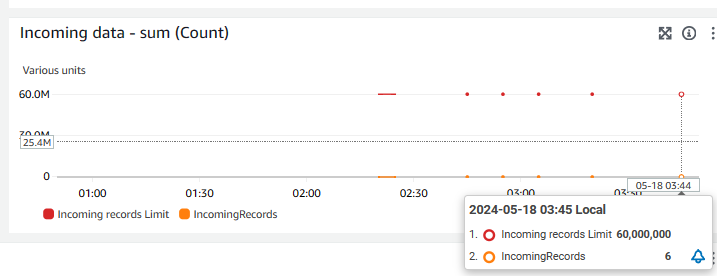

And configure the Firehose Stream to store records in your S3 Bucket.
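The same Firehose Stream could probably be created from the command line with aws firehose create-delivery-stream. This is a sketch under assumptions: `create_firehose` is a hypothetical helper name, and unlike the console, the CLI does not create the IAM Role for you, so the role ARN passed in must already exist and allow Firehose to read the Kinesis Data Stream and write to the bucket.

```shell
# Create a Firehose stream that reads from a Kinesis Data Stream and writes to S3.
# create_firehose is a hypothetical helper. Arguments:
#   $1 Firehose stream name   $2 Kinesis stream ARN
#   $3 S3 bucket ARN          $4 IAM role ARN that Firehose can assume
create_firehose() {
  aws firehose create-delivery-stream \
    --delivery-stream-name "$1" \
    --delivery-stream-type KinesisStreamAsSource \
    --kinesis-stream-source-configuration "KinesisStreamARN=$2,RoleARN=$4" \
    --extended-s3-destination-configuration "RoleARN=$4,BucketARN=$3"
}
```

The Kinesis stream ARN is the StreamARN value returned by aws kinesis list-streams, and the ARN for the bucket in this article would be arn:aws:s3:::my-bucket-abcdefg.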

Let's append a few lines to /tmp/app.log.2.

~]$ echo Hello >> /tmp/app.log.2

~]$ echo World >> /tmp/app.log.2

~]$ echo my >> /tmp/app.log.2

~]$ echo name >> /tmp/app.log.2

~]$ echo is >> /tmp/app.log.2

~]$ echo Jeremy >> /tmp/app.log.2

/var/log/aws-kinesis-agent/aws-kinesis-agent.log should contain something like this, which shows that 6 records were successfully sent to the destination.

2024-05-18 08:44:47.485+0000 (FileTailer[kinesis:my-kinesis-data-stream:/tmp/app.log*].MetricsEmitter RUNNING) com.amazon.kinesis.streaming.agent.tailing.FileTailer [INFO] FileTailer[kinesis:my-kinesis-data-stream:/tmp/app.log*]: Tailer Progress: Tailer has parsed 12 records (52 bytes), transformed 0 records, skipped 0 records, and has successfully sent 6 records to destination.

2024-05-18 08:44:47.487+0000 (Agent.MetricsEmitter RUNNING) com.amazon.kinesis.streaming.agent.Agent [INFO] Agent: Progress: 12 records parsed (52 bytes), and 6 records sent successfully to destinations. Uptime: 3840062ms

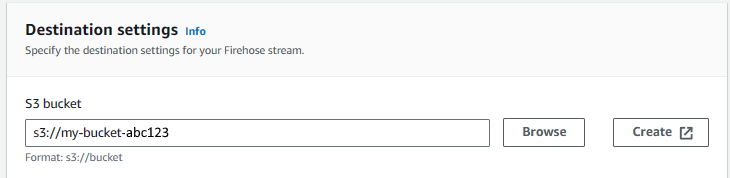

And the Kinesis Monitoring console should show 6 incoming records. Nice - so far, so good.

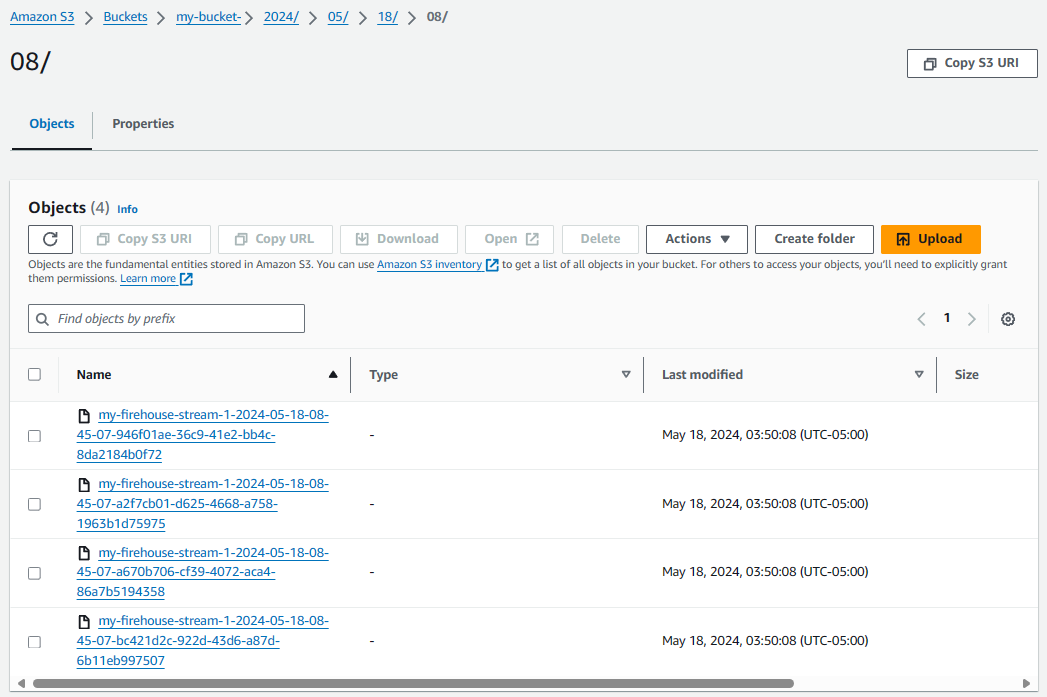

And over in the S3 Bucket, there should be files that contain the lines in /tmp/app.log. I think we have this pieced together now. Pretty cool, eh?

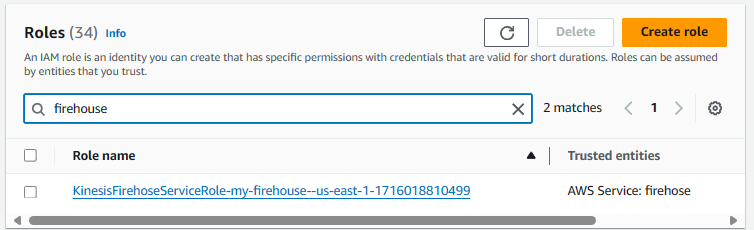
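The bucket can also be checked from the command line. A sketch, with two hypothetical helper names (`list_delivered_objects` and `print_delivered_object`): keep in mind that Firehose buffers records before writing, so the objects may take a few minutes to show up.

```shell
# List everything Firehose has written to the bucket given as $1.
list_delivered_objects() {
  aws s3 ls "s3://$1" --recursive
}

# Stream one object ($2 is its key) from bucket $1 to the terminal.
print_delivered_object() {
  aws s3 cp "s3://$1/$2" -
}
```

For example, run `list_delivered_objects my-bucket-abcdefg` first, then pass one of the listed keys to `print_delivered_object` to see the log lines.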

Creating the Firehose Stream also creates an IAM Role, so if this is only a proof of concept and you plan to delete the Firehose Stream, you'll probably want to delete the IAM Role too.
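If this was only a proof of concept, the rest of the resources could be torn down from the command line as well. This is a sketch, and it is destructive, so double check the names first: `cleanup_poc` is a hypothetical helper name, and the IAM Role is left out on purpose because its generated name will differ in your account.

```shell
# Delete the proof-of-concept resources. Each step runs even if an earlier one fails.
# cleanup_poc is a hypothetical helper. Arguments:
#   $1 Firehose stream name   $2 Kinesis stream name   $3 S3 bucket name
cleanup_poc() {
  aws firehose delete-delivery-stream --delivery-stream-name "$1"
  aws kinesis delete-stream --stream-name "$2"
  # --force removes the objects in the bucket before removing the bucket itself.
  aws s3 rb "s3://$3" --force
}
```

For example, `cleanup_poc my-firehose-stream my-kinesis-data-stream my-bucket-abcdefg`, where my-firehose-stream stands in for whatever you named your Firehose Stream.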
