I once had a situation where the root partition on one of my EC2 instances was at 99% used. Yikes!

[ec2-user@ip-172-10-11-12 ~]$ df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 4.0M 0 4.0M 0% /dev

tmpfs 951M 0 951M 0% /dev/shm

tmpfs 381M 5.6M 375M 2% /run

/dev/nvme0n1p1 8.0G 7.8G 162M 99% /

tmpfs 951M 0 951M 0% /tmp

tmpfs 191M 0 191M 0% /run/user/1000

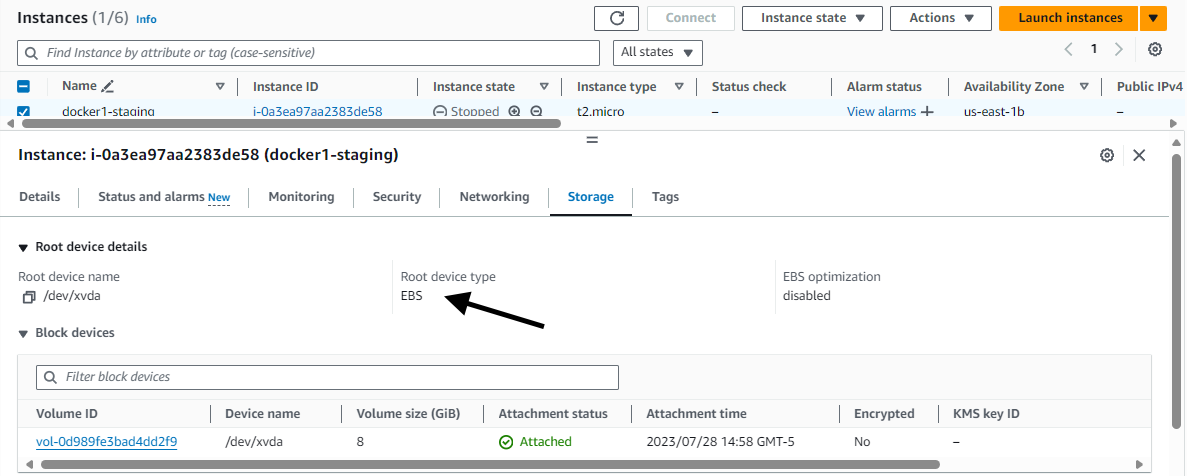
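
If you would rather catch this before it bites, the check can be scripted. Here is a minimal sketch, assuming a POSIX-style df and awk are available. The function names and the 90% threshold are my own illustrative choices, not anything AWS provides.

```shell
#!/bin/sh
# Print the Use% of the file system holding a path (default: /), digits only.
root_usage_pct() {
    # -P forces the POSIX one-line-per-file-system layout; column 5 is the capacity.
    df -P "${1:-/}" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# Succeed when usage is at or above a threshold.
# The default of 90 is an arbitrary illustrative value (assumption).
is_nearly_full() {
    [ "$(root_usage_pct "${1:-/}")" -ge "${2:-90}" ]
}

if is_nearly_full / 90; then
    echo "root file system is at $(root_usage_pct /)% used"
fi
```

On the instance in this article, the check would fire, since / is at 99%.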

By default, when you create a new EC2 instance, its root volume is an Elastic Block Store (EBS) volume. You can see this on the Storage tab of your EC2 instance.

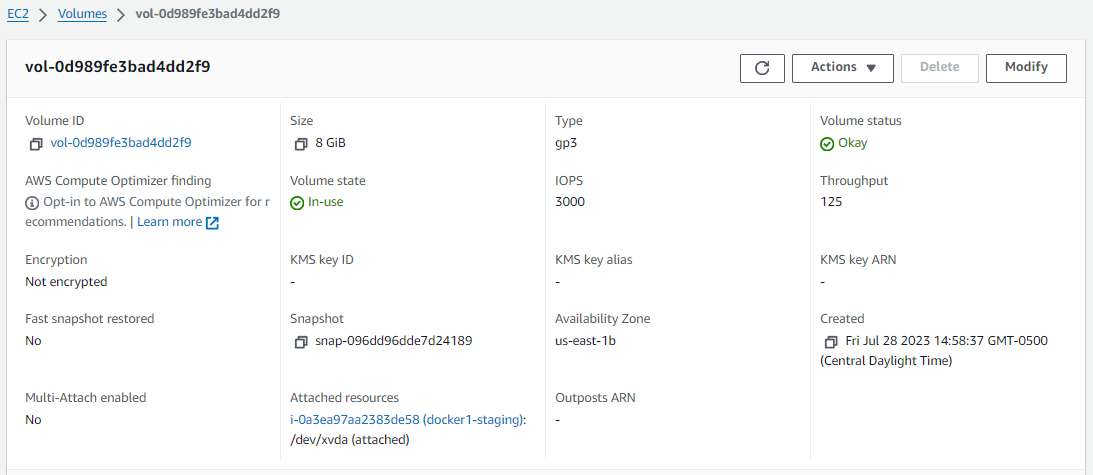

The EBS volume configuration can also be seen in the EC2 console at Elastic Block Store > Volumes, or by using the aws ec2 describe-volumes command.

~]$ aws ec2 describe-volumes

[

{

"AvailabilityZone": "us-east-1b",

"Attachments": [

{

"AttachTime": "2023-07-28T19:58:37.000Z",

"InstanceId": "i-0a3ea97aa2383de58",

"VolumeId": "vol-abcdefg123456789",

"State": "attached",

"DeleteOnTermination": true,

"Device": "/dev/xvda"

}

],

"Encrypted": false,

"VolumeType": "gp3",

"VolumeId": "vol-abcdefg123456789",

"State": "in-use",

"Iops": 3000,

"SnapshotId": "snap-096dd96dde7d24189",

"CreateTime": "2023-07-28T19:58:37.544Z",

"MultiAttachEnabled": false,

"Size": 8

}

]

Notice in this example that the size of the EBS volume is 8 GiB, which matches the size of the root file system reported by the df command.
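
When an account has many volumes, the full JSON gets noisy. Here is a rough sketch that narrows the output with the AWS CLI's --volume-ids, --filters, and --query options. The wrapper function names are hypothetical, and the IDs are the placeholders used in this article.

```shell
#!/bin/sh
# Print the size (in GiB) of a single volume.
volume_size_gib() {
    aws ec2 describe-volumes \
        --volume-ids "$1" \
        --query 'Volumes[0].Size' \
        --output text
}

# Print the IDs of every volume attached to one instance.
volume_ids_for_instance() {
    aws ec2 describe-volumes \
        --filters "Name=attachment.instance-id,Values=$1" \
        --query 'Volumes[].VolumeId' \
        --output text
}

# Usage (assumes the AWS CLI is installed and credentials are configured):
#   volume_size_gib vol-abcdefg123456789
#   volume_ids_for_instance i-0a3ea97aa2383de58
```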

[ec2-user@ip-172-10-11-12 ~]$ df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 4.0M 0 4.0M 0% /dev

tmpfs 951M 0 951M 0% /dev/shm

tmpfs 381M 5.6M 375M 2% /run

/dev/nvme0n1p1 8.0G 7.8G 162M 99% /

tmpfs 951M 0 951M 0% /tmp

tmpfs 191M 0 191M 0% /run/user/1000

So I updated my EBS Volume from 8 to 16 GB.

aws ec2 modify-volume --volume-type gp3 --size 16 --volume-id vol-abcdefg123456789
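
The resize is not instantaneous. As far as I understand the EBS documentation, the new size is usable once the modification reaches the optimizing or completed state. Below is a minimal polling sketch built on aws ec2 describe-volumes-modifications. The function names, the 5-second interval, and the 60-try limit are assumptions of mine.

```shell
#!/bin/sh
# Print the modification state of a volume:
# modifying, optimizing, completed, or failed.
modification_state() {
    aws ec2 describe-volumes-modifications \
        --volume-ids "$1" \
        --query 'VolumesModifications[0].ModificationState' \
        --output text
}

# Poll until the volume can be used at its new size.
# Arguments: volume ID, seconds between polls, maximum number of polls.
wait_for_resize() {
    wfr_volume=$1
    wfr_interval=${2:-5}   # illustrative default (assumption)
    wfr_tries=${3:-60}     # illustrative default (assumption)
    while [ "$wfr_tries" -gt 0 ]; do
        wfr_state=$(modification_state "$wfr_volume")
        case "$wfr_state" in
            optimizing|completed) echo "$wfr_state"; return 0 ;;
            failed) echo "$wfr_state" >&2; return 1 ;;
        esac
        wfr_tries=$((wfr_tries - 1))
        sleep "$wfr_interval"
    done
    echo "timed out waiting for $wfr_volume" >&2
    return 1
}

# Usage (assumes configured AWS credentials):
#   wait_for_resize vol-abcdefg123456789
```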

Then, back on my EC2 instance, I used the lsblk command to see that the root volume (nvme0n1 in this example) had increased to 16 GB but the root partition (nvme0n1p1 in this example) was still 8 GB.

~]$ sudo lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS

nvme0n1 259:0 0 16G 0 disk

├─nvme0n1p1 259:1 0 8G 0 part /

├─nvme0n1p127 259:2 0 1M 0 part

└─nvme0n1p128 259:3 0 10M 0 part

Since the root partition (8 GB in this example) is smaller than the root volume (16 GB in this example), the growpart command can be used to grow the root partition up to the size of the root volume. Note that growpart takes the disk and the partition number as two separate arguments.

~]$ sudo growpart /dev/nvme0n1 1

CHANGED: partition=1 start=24576 old: size=16752607 end=16777183 new: size=33529823 end=33554399
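
Since growpart wants the disk and the partition number separately, and NVMe names put a "p" between the two, it is easy to mistype. Here is a small sketch that splits a partition name into those two arguments. The split_partition helper is hypothetical, and it only handles partition names (not whole-disk names).

```shell
#!/bin/sh
# Split a partition device name into "disk partition-number".
# Only valid for partition names such as /dev/nvme0n1p1 or /dev/xvda1.
split_partition() {
    sp_dev=${1#/dev/}              # drop a leading /dev/ if present
    sp_part=${sp_dev##*[!0-9]}     # keep only the trailing digits
    sp_disk=${sp_dev%"$sp_part"}   # everything before those digits
    # NVMe and mmcblk names use a "p" separator before the partition number.
    case "$sp_disk" in
        nvme*p|mmcblk*p) sp_disk=${sp_disk%p} ;;
    esac
    printf '/dev/%s %s\n' "$sp_disk" "$sp_part"
}

split_partition /dev/nvme0n1p1   # prints "/dev/nvme0n1 1"

# Usage on the instance (findmnt reports the device mounted on /):
#   set -- $(split_partition "$(findmnt -n -o SOURCE /)")
#   sudo growpart --dry-run "$1" "$2"   # preview only
#   sudo growpart "$1" "$2"
```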

And now the lsblk command shows both the root volume and root partition are 16 GB. Nice!

~]$ lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS

nvme0n1 259:0 0 16G 0 disk

├─nvme0n1p1 259:1 0 16G 0 part /

├─nvme0n1p127 259:2 0 1M 0 part

└─nvme0n1p128 259:3 0 10M 0 part

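I then used the df command with the -T flag to get the size, type, and mount point of the file system that I wanted to extend. Notice that the file system is still 8.0G, because growing the partition does not grow the file system inside it.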

~]$ df -hT

Filesystem Type Size Used Avail Use% Mounted on

devtmpfs devtmpfs 4.0M 0 4.0M 0% /dev

tmpfs tmpfs 951M 0 951M 0% /dev/shm

tmpfs tmpfs 381M 5.6M 375M 2% /run

/dev/nvme0n1p1 xfs 8.0G 7.8G 161M 99% /

tmpfs tmpfs 951M 0 951M 0% /tmp

tmpfs tmpfs 191M 0 191M 0% /run/user/1000
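
The Type column matters because the grow tool depends on the file system. Here is a hedged sketch of that decision. The grow_command helper is hypothetical, and the ext2/ext3/ext4 branch goes beyond what I actually ran in this article, so treat it as an assumption based on how resize2fs is normally used.

```shell
#!/bin/sh
# Print the command that grows a mounted file system.
# Arguments: file system type, mount point, backing device.
grow_command() {
    case "$1" in
        # xfs_growfs operates on the mount point.
        xfs)            echo "xfs_growfs -d $2" ;;
        # resize2fs operates on the device (not exercised in this article).
        ext2|ext3|ext4) echo "resize2fs $3" ;;
        *)              echo "unsupported file system type: $1" >&2; return 1 ;;
    esac
}

grow_command xfs / /dev/nvme0n1p1   # prints "xfs_growfs -d /"

# Usage on the instance:
#   fstype=$(findmnt -n -o FSTYPE /)
#   source=$(findmnt -n -o SOURCE /)
#   sudo $(grow_command "$fstype" / "$source")
```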

Since the file system type is XFS, I used the xfs_growfs command to grow the file system mounted on /. The last line of the output says "data blocks changed from 2094075 to 4191227". Nice!

~]$ sudo xfs_growfs -d /

meta-data=/dev/nvme0n1p1 isize=512 agcount=2, agsize=1047040 blks

= sectsz=4096 attr=2, projid32bit=1

= crc=1 finobt=1, sparse=1, rmapbt=0

= reflink=1 bigtime=1 inobtcount=1

data = bsize=4096 blocks=2094075, imaxpct=25

= sunit=128 swidth=128 blks

naming =version 2 bsize=16384 ascii-ci=0, ftype=1

log =internal log bsize=4096 blocks=16384, version=2

= sectsz=4096 sunit=4 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

data blocks changed from 2094075 to 4191227
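
To sanity check those block counts, multiply them by the block size (bsize=4096 in the output above). Here is a quick arithmetic sketch. The blocks_to_mib helper is just an illustrative name.

```shell
#!/bin/sh
# Convert a block count to whole MiB for a given block size in bytes.
blocks_to_mib() {
    echo $(( $1 * $2 / 1024 / 1024 ))
}

blocks_to_mib 2094075 4096   # prints 8179  (just under 8 GiB = 8192 MiB)
blocks_to_mib 4191227 4096   # prints 16371 (just under 16 GiB = 16384 MiB)
```

So the file system went from roughly 8 GiB to roughly 16 GiB, which lines up with the new volume size.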

And hooray, the root file system (/) is now at 50% used. Awesome sauce!

~]$ df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 4.0M 0 4.0M 0% /dev

tmpfs 951M 0 951M 0% /dev/shm

tmpfs 381M 5.6M 375M 2% /run

/dev/nvme0n1p1 16G 7.9G 8.2G 50% /

tmpfs 951M 0 951M 0% /tmp

tmpfs 191M 0 191M 0% /run/user/1000
