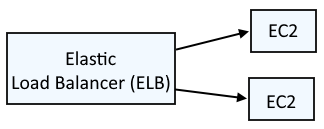

An Elastic Load Balancer (ELB) is typically used to load balance requests across two (or more) different EC2 instances.

There are a few different types of load balancers.

- Application Load Balancers
  - Typically used to load balance requests to a web app
  - Typically use the HTTP and HTTPS protocols
  - Cannot be bound to an Elastic IP address (static IP address)
- Network Load Balancers
  - Typically used to load balance requests to one or more EC2 instances, SQL databases, or Application Load Balancers
  - Typically use the TCP protocol
  - Can be bound to one or more Elastic IP addresses (static IP addresses)
- Gateway Load Balancers
- Classic Load Balancers (deprecated)

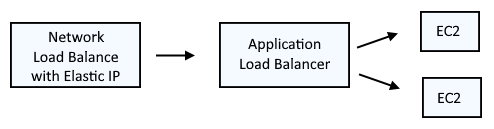

It's important to recognize that an Elastic IP address cannot be assigned to an Application Load Balancer. A common approach is to have a Network Load Balancer with an assigned Elastic IP forward requests onto an Application Load Balancer.
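As a rough sketch of the Network Load Balancer to Application Load Balancer pattern, a Network Load Balancer target group can use `target_type = "alb"` and register an existing Application Load Balancer as its only target. The variables `vpc_id` and `alb_arn`, the resource names, and port 443 are hypothetical placeholders, and this assumes the Application Load Balancer already has a listener on that same port.

```hcl
# Hypothetical inputs: the VPC the load balancers live in and the ARN
# of an existing Application Load Balancer.
variable "vpc_id" {
  type = string
}

variable "alb_arn" {
  type = string
}

# A Network Load Balancer target group whose target is an Application
# Load Balancer rather than EC2 instances.
resource "aws_lb_target_group" "nlb-to-alb" {
  name        = "nlb-to-alb"
  target_type = "alb"
  protocol    = "TCP"
  port        = 443 # assumed to match a listener port on the ALB
  vpc_id      = var.vpc_id
}

# Register the Application Load Balancer as the target.
resource "aws_lb_target_group_attachment" "alb" {
  target_group_arn = aws_lb_target_group.nlb-to-alb.arn
  target_id        = var.alb_arn
  port             = 443
}
```

A TCP listener on the Network Load Balancer with the Elastic IP would then forward to this target group.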

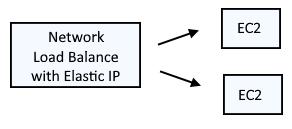

Alternatively, a Network Load Balancer can forward requests directly to EC2 instances.

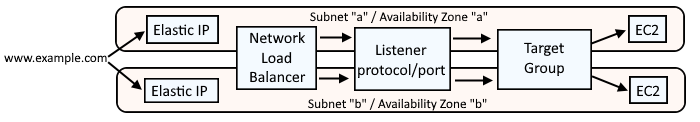

It is also important to recognize that if your Network Load Balancer will be routing requests to EC2 instances in different subnets / availability zones, the Network Load Balancer will need an Elastic IP for each subnet / availability zone.

This assumes you have set up Terraform with the Amazon Web Services (AWS) provider. If not, check out my article Amazon Web Services (AWS) Getting Started with Terraform.

Let's say you have the following files on your Terraform server.

```
├── required_providers.tf
├── ec2_instances (directory)
│   ├── data.tf
│   ├── outputs.tf
│   ├── provider.tf
├── elastic_ips (directory)
│   ├── data.tf
│   ├── outputs.tf
│   ├── provider.tf
├── network_load_balancers (directory)
│   ├── data.tf
│   ├── listener.tf
│   ├── load_balancer.tf
│   ├── outputs.tf
│   ├── provider.tf
│   ├── remote_states.tf
│   ├── target_group.tf
```

If your ultimate objective is to have the Network Load Balancer forward requests to EC2 instances, the EC2 instances will almost always be in different availability zones, perhaps like this.

- my-foo-instance = us-east-1a

- my-bar-instance = us-east-1b

In this scenario, the Network Load Balancer will need two Elastic IPs, one mapped to us-east-1a and the other to us-east-1b.

This assumes you have already run the terraform init and then terraform refresh commands in the ec2_instances and elastic_ips directories, producing outputs like these.

```
elastic_ip_allocation_ids = [
  "eipalloc-000111222333444",
  "eipalloc-111222333444555",
]
my_foo_instance_subnet = "subnet-00011122233344455"
my_bar_instance_subnet = "subnet-11122233344455566"
```
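The contents of the elastic_ips directory aren't shown in this article. As a minimal sketch, assuming two Elastic IPs already exist and are tagged with the hypothetical Name values my-foo-eip and my-bar-eip, data.tf and outputs.tf in that directory could look something like this. The part the Network Load Balancer depends on is the `elastic_ip_allocation_ids` list output; for a VPC Elastic IP, the `aws_eip` data source's `id` attribute is the allocation ID.

```hcl
# elastic_ips/data.tf
# Look up existing Elastic IPs by Name tag (tag values are hypothetical).
data "aws_eip" "my-foo-eip" {
  filter {
    name   = "tag:Name"
    values = ["my-foo-eip"]
  }
}

data "aws_eip" "my-bar-eip" {
  filter {
    name   = "tag:Name"
    values = ["my-bar-eip"]
  }
}

# elastic_ips/outputs.tf
# List order matters: index 0 is paired with my-foo-instance's subnet and
# index 1 with my-bar-instance's subnet in load_balancer.tf.
output "elastic_ip_allocation_ids" {
  value = [
    data.aws_eip.my-foo-eip.id,
    data.aws_eip.my-bar-eip.id,
  ]
}
```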

Then, in the remote_states.tf file in the network_load_balancers directory, make the ec2_instances and elastic_ips outputs available to the network_load_balancers directory. Check out my article get output variables from terraform.tfstate using terraform_remote_state for more details on this.

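```hcl
# Read the outputs of the ec2_instances directory from its local state file
data "terraform_remote_state" "ec2_instances" {
  backend = "local"

  config = {
    path = "/usr/local/terraform/aws/ec2_instances/terraform.tfstate"
  }
}

# Read the outputs of the elastic_ips directory from its local state file
data "terraform_remote_state" "elastic_ip" {
  backend = "local"

  config = {
    path = "/usr/local/terraform/aws/elastic_ips/terraform.tfstate"
  }
}
```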

required_providers.tf will almost always have this.

```hcl
terraform {
  required_providers {
    aws = {
      source = "hashicorp/aws"
    }
  }
}
```

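Let's say provider.tf in the network_load_balancers directory has the following. In this example, the "default" profile in /home/username/.aws/config and /home/username/.aws/credentials is being used. This assumes you have set up Terraform as described in Amazon Web Services (AWS) - Getting Started with Terraform.

```hcl
provider "aws" {
  # Use the "default" profile from ~/.aws/config and ~/.aws/credentials.
  # No alias is set, so resources in this directory use this provider
  # configuration without needing a provider = aws.<alias> argument.
  profile = "default"

  # region must be a real AWS region name; us-east-1 matches the
  # us-east-1a and us-east-1b availability zones used in this example.
  region = "us-east-1"
}
```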

The Network Load Balancer will forward requests to targets (typically EC2 instances, or Application Load Balancers that target EC2 instances), so the Network Load Balancer must be enabled in the same Availability Zones as those targets. In this example, that is done by mapping the Network Load Balancer onto the same subnets the EC2 instances use. Let's say data.tf in the ec2_instances directory contains something like this.

```hcl
data "aws_instance" "my-foo-instance" {
  filter {
    name   = "tag:Name"
    values = ["foo"]
  }
}

data "aws_instance" "my-bar-instance" {
  filter {
    name   = "tag:Name"
    values = ["bar"]
  }
}
```
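For the network_load_balancers directory to read each instance's subnet through terraform_remote_state, outputs.tf in the ec2_instances directory needs to expose it. A minimal sketch that produces the my_foo_instance_subnet and my_bar_instance_subnet outputs shown earlier, using the `subnet_id` attribute of the `aws_instance` data source; the two instance ID outputs are hypothetical extras that are only useful if you later register these instances in a target group.

```hcl
# ec2_instances/outputs.tf
# Subnet of each instance, consumed by the Network Load Balancer's subnet_mapping blocks.
output "my_foo_instance_subnet" {
  value = data.aws_instance.my-foo-instance.subnet_id
}

output "my_bar_instance_subnet" {
  value = data.aws_instance.my-bar-instance.subnet_id
}

# Hypothetical: instance IDs, handy when registering targets in a target group.
output "my_foo_instance_id" {
  value = data.aws_instance.my-foo-instance.id
}

output "my_bar_instance_id" {
  value = data.aws_instance.my-bar-instance.id
}
```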

And load_balancer.tf could have the following to create a Network Load Balancer.

```hcl
resource "aws_lb" "my-network-load-balancer" {
  name               = "my-network-load-balancer"
  internal           = false
  load_balancer_type = "network"

  # With deletion protection enabled, terraform destroy will fail until
  # this is set back to false and applied.
  enable_deletion_protection = true

  # One subnet_mapping per availability zone, each with its own Elastic IP.
  # my-foo-instance's subnet (us-east-1a)
  subnet_mapping {
    subnet_id     = data.terraform_remote_state.ec2_instances.outputs.my_foo_instance_subnet
    allocation_id = data.terraform_remote_state.elastic_ip.outputs.elastic_ip_allocation_ids[0]
  }

  # my-bar-instance's subnet (us-east-1b)
  subnet_mapping {
    subnet_id     = data.terraform_remote_state.ec2_instances.outputs.my_bar_instance_subnet
    allocation_id = data.terraform_remote_state.elastic_ip.outputs.elastic_ip_allocation_ids[1]
  }

  tags = {
    Environment = "staging"
    Name        = "my-network-load-balancer"
  }
}
```
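The directory layout also includes an outputs.tf in network_load_balancers. A minimal sketch, with hypothetical output names, could expose the Network Load Balancer's ARN (useful when creating a listener or target group in another directory) and its DNS name; clients can reach the load balancer either through the Elastic IPs or through this DNS name.

```hcl
# network_load_balancers/outputs.tf (output names are hypothetical)
output "network_load_balancer_arn" {
  value = aws_lb.my-network-load-balancer.arn
}

output "network_load_balancer_dns_name" {
  value = aws_lb.my-network-load-balancer.dns_name
}
```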

Almost always, you will also:

- Create Network Load Balancer (ELB) Target Group using Terraform

- Create Network Load Balancer (ELB) Listener using Terraform

- Register Targets in a Target Group using Terraform
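Those steps are covered in their own articles. As a condensed sketch of how they fit together in the target_group.tf, listener.tf, and data.tf files of the network_load_balancers directory, assuming the ec2_instances directory exports hypothetical my_foo_instance_id and my_bar_instance_id outputs and the instances accept TCP traffic on port 80 (also an assumption):

```hcl
# network_load_balancers/data.tf (addition)
# Target groups need a vpc_id; look it up from my-foo-instance's subnet.
data "aws_subnet" "my-foo-subnet" {
  id = data.terraform_remote_state.ec2_instances.outputs.my_foo_instance_subnet
}

# network_load_balancers/target_group.tf
resource "aws_lb_target_group" "my-target-group" {
  name        = "my-target-group"
  target_type = "instance"
  protocol    = "TCP"
  port        = 80 # hypothetical port the instances listen on
  vpc_id      = data.aws_subnet.my-foo-subnet.vpc_id
}

# Register each EC2 instance in the target group.
# my_foo_instance_id / my_bar_instance_id are hypothetical remote state outputs.
resource "aws_lb_target_group_attachment" "my-foo-instance" {
  target_group_arn = aws_lb_target_group.my-target-group.arn
  target_id        = data.terraform_remote_state.ec2_instances.outputs.my_foo_instance_id
  port             = 80
}

resource "aws_lb_target_group_attachment" "my-bar-instance" {
  target_group_arn = aws_lb_target_group.my-target-group.arn
  target_id        = data.terraform_remote_state.ec2_instances.outputs.my_bar_instance_id
  port             = 80
}

# network_load_balancers/listener.tf
# Accept TCP on port 80 and forward everything to the target group.
resource "aws_lb_listener" "my-listener" {
  load_balancer_arn = aws_lb.my-network-load-balancer.arn
  protocol          = "TCP"
  port              = 80

  default_action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.my-target-group.arn
  }
}
```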

The terraform plan command can be used to see what Terraform will try to do.

```
terraform plan
```

And the terraform apply command can be used to create the Network Load Balancer.

```
terraform apply
```
