This assumes you have already installed the OpenShift API for Data Protection (OADP) Operator.

Let's say you want to store OADP backups in an Amazon Web Services S3 Bucket. This assumes you have already created and configured an S3 Bucket. If not, check out my article OpenShift - Store OpenShift API for Data Protection (OADP) objects in an Amazon Web Services (AWS) S3 Bucket.

Let's say the name of your S3 Bucket is my-bucket-asdfadkjsfasfljdf. Let's create a YAML file that contains the following, replacing my-bucket-asdfadkjsfasfljdf with the name of your S3 Bucket.

apiVersion: velero.io/v1
kind: BackupStorageLocation
metadata:
  name: my-backup-storage-location
  namespace: openshift-adp
spec:
  config:
    region: us-east-1
    profile: velero
  credential:
    key: cloud
    name: cloud-credentials
  objectStorage:
    bucket: my-bucket-asdfadkjsfasfljdf
  provider: aws
  default: true
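The credential section above points at the key named cloud in a secret named cloud-credentials, and profile: velero selects a profile inside that credentials file. If the secret does not exist yet, here is a minimal sketch of one way it could be created. The helper function names and the file name credentials-velero are made up for illustration, and the access key values are placeholders.

```shell
# Hypothetical helper: write an AWS-style credentials file whose profile
# name matches "profile: velero" in the BackupStorageLocation config.
write_credentials_file() {
  file="$1"
  access_key_id="$2"
  secret_access_key="$3"
  cat > "$file" <<EOF
[velero]
aws_access_key_id=${access_key_id}
aws_secret_access_key=${secret_access_key}
EOF
}

# Hypothetical helper: store the file in a secret named cloud-credentials
# under the key "cloud", matching credential.name and credential.key.
create_credentials_secret() {
  oc create secret generic cloud-credentials \
    --namespace openshift-adp \
    --from-file cloud="$1"
}
```

For example, write_credentials_file credentials-velero "your access key id" "your secret access key" followed by create_credentials_secret credentials-velero.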

If you have a private URL for accessing S3 Buckets, the s3Url option can be used. Often, when using s3Url, you also need to provide the SSL certificate that OADP will use to establish the TLS handshake with the S3 URL. In this scenario, it's a good idea to include insecureSkipTLSVerify: "false" so that TLS verification is not skipped, and to provide the base64 encoded SSL certificate in the caCert key.

apiVersion: velero.io/v1
kind: BackupStorageLocation
metadata:
  name: my-backup-storage-location
  namespace: openshift-adp
spec:
  config:
    region: us-east-1
    profile: velero
    s3ForcePathStyle: "true"
    s3Url: https://s3.example.com
    insecureSkipTLSVerify: "false"
  credential:
    key: cloud
    name: cloud-credentials
  objectStorage:
    bucket: my-private-bucket-abc123
    caCert: <base64 encoded SSL certificate>
  provider: aws
  default: true

For example, if your S3 URL is https://s3.example.com, you could use the openssl s_client command to get the SSL certificate being presented by https://s3.example.com. Check out my article FreeKB - OpenSSL - View SSL certificate using s_client and showcerts for more details on the openssl s_client command. This one-liner would return the base64 encoded string of the SSL certificate, which you would then use in the caCert key in your backup storage location YAML.

echo | openssl s_client -connect s3.example.com:443 -showcerts 2>/dev/null | sed -ne '/-----BEGIN CERTIFICATE-----/,/-----END CERTIFICATE-----/p' | base64 -w0
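Before pasting the string into caCert, it can be worth checking that it decodes back into PEM certificates. Here is a minimal sketch, assuming GNU base64 and the openssl CLI are available. The function names are made up for illustration.

```shell
# Hypothetical helper: count the PEM certificates contained in a
# base64 encoded caCert value passed as the first argument.
count_certs_in_cacert() {
  printf '%s' "$1" | base64 -d | grep -c -- '-----BEGIN CERTIFICATE-----'
}

# Hypothetical helper: print the subject, issuer and expiry date of the
# first certificate in the decoded value (openssl x509 reads one certificate).
show_first_cert() {
  printf '%s' "$1" | base64 -d | openssl x509 -noout -subject -issuer -enddate
}
```

Since the one-liner uses -showcerts, the count may be greater than 1 when the server presents a certificate chain.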

Let's use the oc apply command to create the Backup Storage Location from the YAML file (named my-data-protection-application.yaml in this example).

oc apply --filename my-data-protection-application.yaml

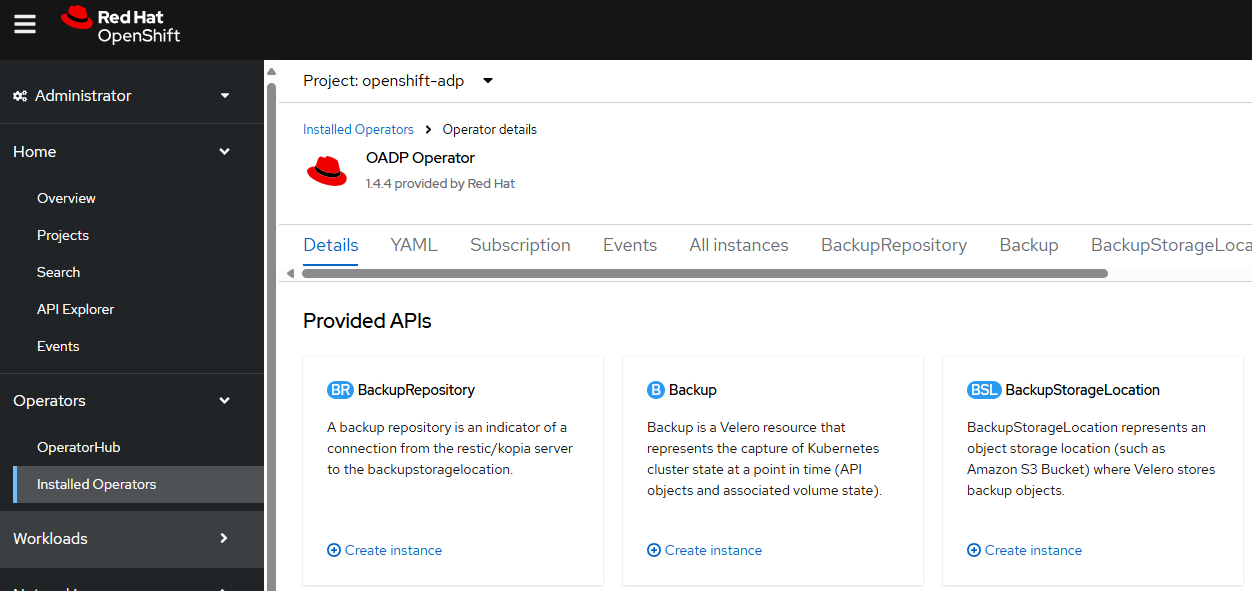

Or in the OpenShift console, at Operators > Installed Operators > OADP select BackupStorageLocation > Create instance.

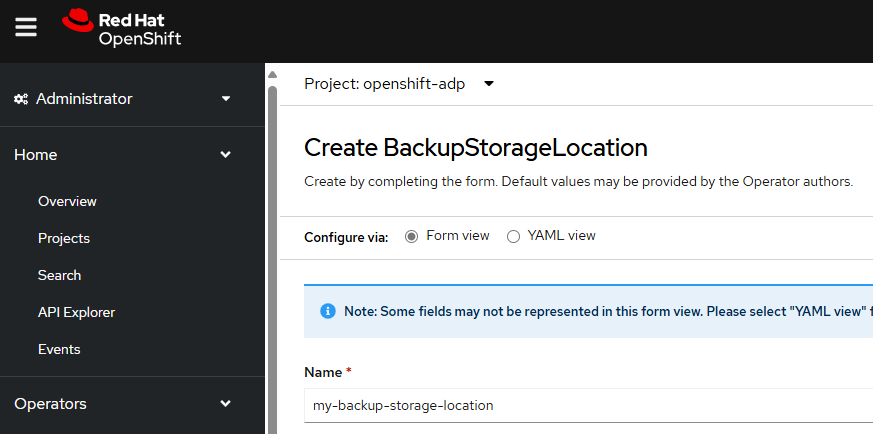

Let's give the Backup Storage Location a meaningful name.

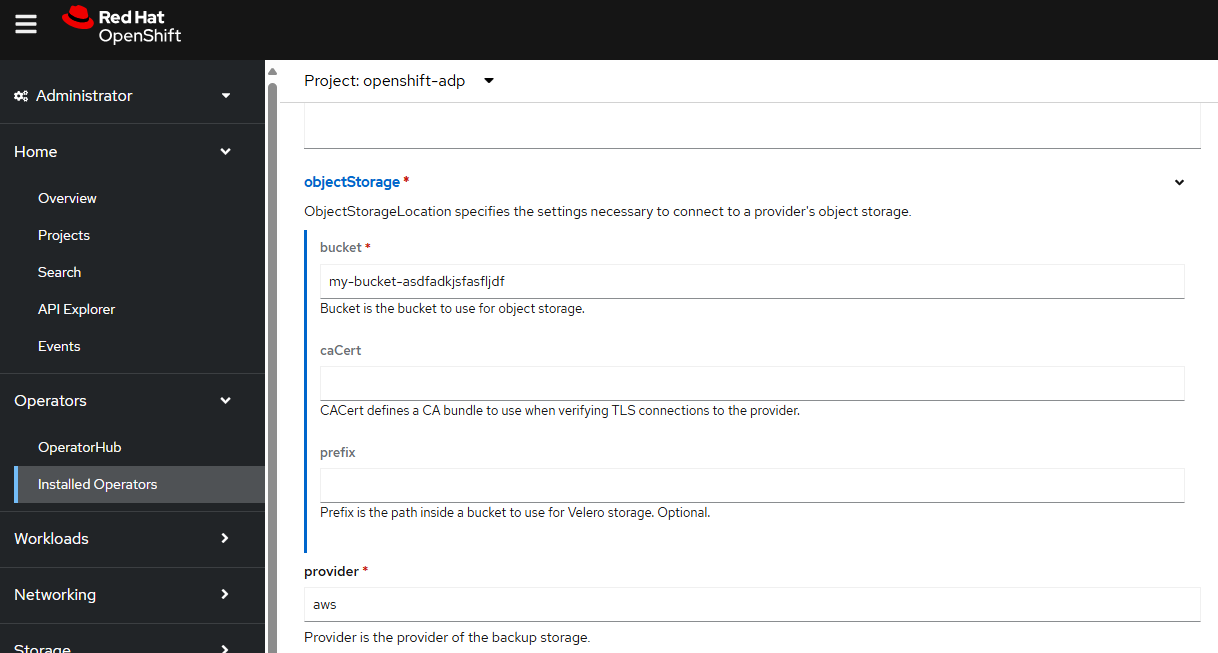

In this example, since the backups are being stored in an Amazon Web Services (AWS) S3 Bucket, let's enter aws as the provider and also provide the name of the S3 bucket.

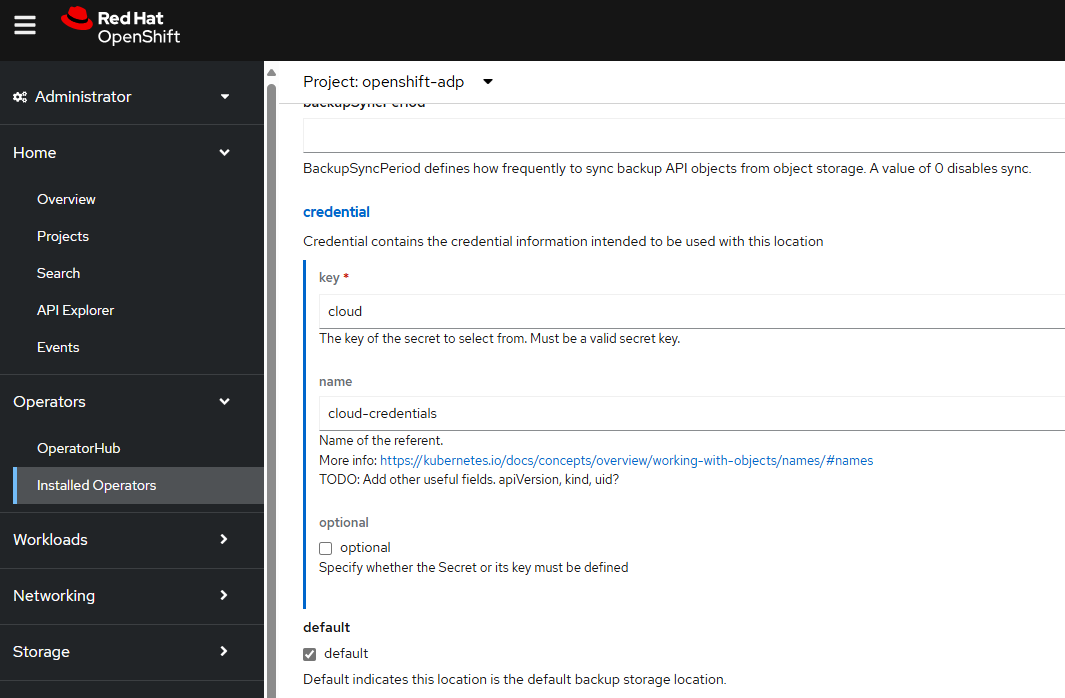

And let's provide the credential name, which is the secret named cloud-credentials, and the credential key, which is cloud.

Let's check to see if the backupStorageLocation is Available.

~]$ oc get backupStorageLocations --namespace openshift-adp
NAME                         PHASE       LAST VALIDATED   AGE   DEFAULT
my-backup-storage-location   Available   60s              64s   true
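It can take a moment after creation before the phase is reported. Here is a minimal sketch of polling for the Available phase, assuming the phase is exposed at .status.phase on the resource. The function name and the default attempt and delay values are made up for illustration.

```shell
# Hypothetical helper: poll a BackupStorageLocation until its phase is Available.
# Arguments: name, namespace, attempts (default 30), delay in seconds (default 10).
wait_for_bsl_available() {
  name="$1"
  namespace="$2"
  attempts="${3:-30}"
  delay="${4:-10}"
  phase=""
  i=1
  while [ "$i" -le "$attempts" ]; do
    # Read only the phase field from the resource status
    phase="$(oc get backupstoragelocation "$name" \
      --namespace "$namespace" \
      --output jsonpath='{.status.phase}' 2>/dev/null)"
    if [ "$phase" = "Available" ]; then
      echo "$name is Available"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "$name is still ${phase:-unknown} after $attempts attempts" >&2
  return 1
}
```

For example, wait_for_bsl_available my-backup-storage-location openshift-adp would check every 10 seconds, up to 30 times, and return a non-zero exit code if the phase never becomes Available.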

This could also be done using the oc exec command to use the velero CLI in the velero container.

~]$ oc exec deployment/velero --container velero --namespace openshift-adp -- /velero backup-location get
NAME      PROVIDER   BUCKET/PREFIX                          PHASE       LAST VALIDATED                  ACCESS MODE   DEFAULT
default   aws        my-backup-storage-location/my-prefix   Available   2025-06-10 05:36:19 +0000 UTC   ReadWrite     true

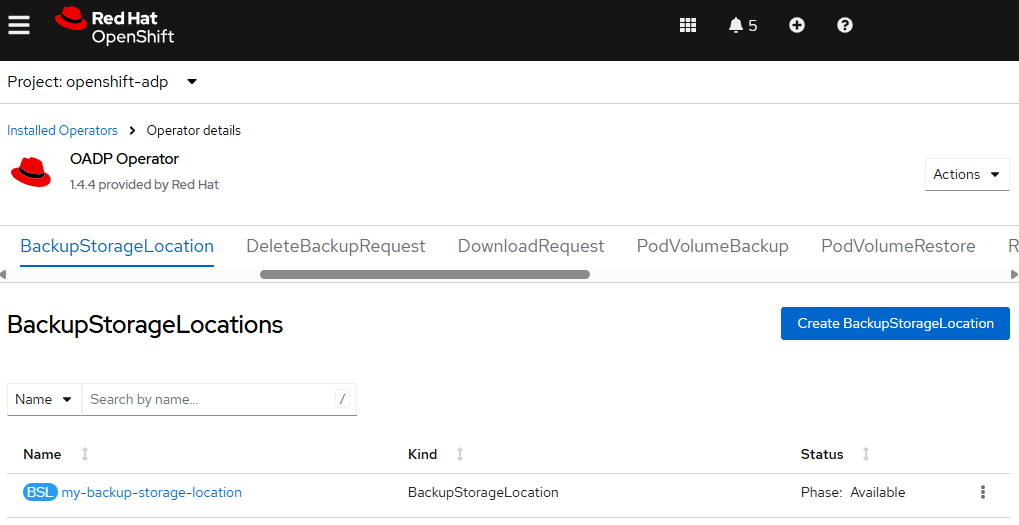

Or in the OpenShift console, at Operators > Installed Operators > OADP select BackupStorageLocations and check to see if the phase is Available.
