If you are not familiar with the oc command, refer to OpenShift - Getting Started with the oc command.

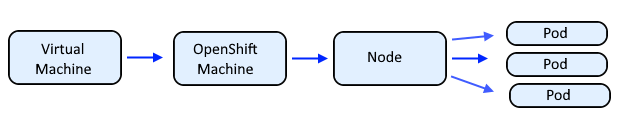

I like to think of a Machine as OpenShift's representation of a virtual machine, such as an Amazon Web Services (AWS) EC2 instance or a VMware virtual machine. A Node runs on the Machine, and pods run on the Node. Machine Configs configure the operating system of that virtual machine, for example a Linux systemd service such as sshd, chronyd, or NetworkManager.

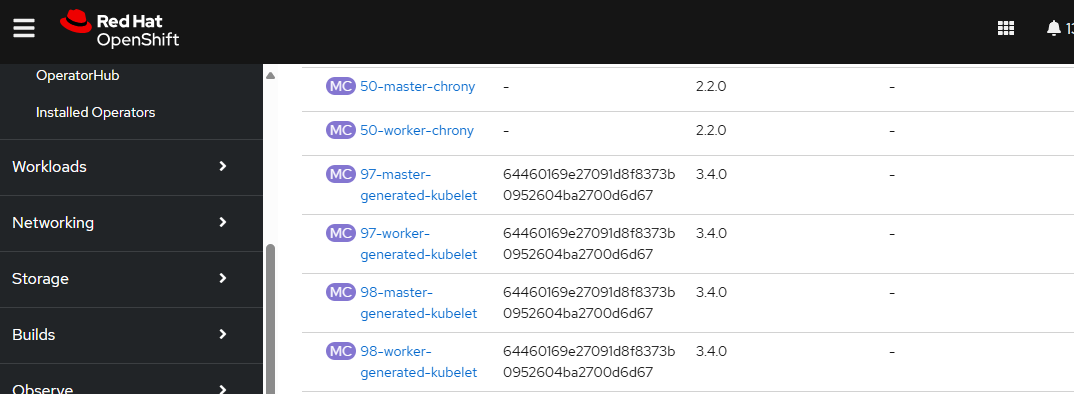

The oc get MachineConfigs command lists the current Machine Configs. Let's say you want to add a new Machine Config or change an existing one, for example to change the chrony configuration. Notice in this example that there is already a chrony Machine Config named 50-worker-chrony.

~]$ oc get MachineConfigs
NAME                                               GENERATEDBYCONTROLLER                      IGNITIONVERSION   AGE
00-master                                          64460169e27091d8f8373b0952604ba2700d6d67   3.4.0             635d
00-worker                                          64460169e27091d8f8373b0952604ba2700d6d67   3.4.0             635d
01-master-container-runtime                        64460169e27091d8f8373b0952604ba2700d6d67   3.4.0             635d
01-master-kubelet                                  64460169e27091d8f8373b0952604ba2700d6d67   3.4.0             635d
01-worker-container-runtime                        64460169e27091d8f8373b0952604ba2700d6d67   3.4.0             635d
01-worker-kubelet                                  64460169e27091d8f8373b0952604ba2700d6d67   3.4.0             635d
50-master-chrony                                                                              2.2.0             635d
50-worker-chrony                                                                              2.2.0             635d
97-master-generated-kubelet                        64460169e27091d8f8373b0952604ba2700d6d67   3.4.0             69d
97-worker-generated-kubelet                        64460169e27091d8f8373b0952604ba2700d6d67   3.4.0             69d
98-master-generated-kubelet                        64460169e27091d8f8373b0952604ba2700d6d67   3.4.0             324d
98-worker-generated-kubelet                        64460169e27091d8f8373b0952604ba2700d6d67   3.4.0             324d
99-master-ssh                                                                                 3.2.0             635d
99-worker-generated-kubelet                        64460169e27091d8f8373b0952604ba2700d6d67   3.4.0             97d
99-worker-generated-registries                     64460169e27091d8f8373b0952604ba2700d6d67   3.4.0             635d
99-worker-ssh                                                                                 3.2.0             635d
rendered-master-0c42f927476ca3dfcf0f21c42ffada1a   64460169e27091d8f8373b0952604ba2700d6d67   3.4.0             69d
rendered-master-0cf231fe40e2ee10a653f23e8d60ecbe   e54335a3855fc53597e883ba98b548add39a8938   3.2.0             439d
rendered-master-17f080c2790200ba472aebf7b67bca4c   e54335a3855fc53597e883ba98b548add39a8938   3.2.0             488d
rendered-master-1ec876f89fa31673bcd9206c12063264   64460169e27091d8f8373b0952604ba2700d6d67   3.4.0             12d
rendered-master-309954ecbf6cf1870ec75a7624b82ef9   e54335a3855fc53597e883ba98b548add39a8938   3.2.0             439d
rendered-master-34e30df059394127a0a12145b64a022a   c29fe458496f7351a1e5c60e3af04d400a3d95c1   3.4.0             79d

Or in the OpenShift console at Compute > MachineConfigs.
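If the list is long, it can help to narrow it down to the source Machine Configs for a single role. Here is a minimal sketch, assuming your Machine Configs follow the NN-role-name naming convention seen in the output above. The function name source_configs_for_role is my own and is not part of oc.

```shell
#!/usr/bin/env bash
# Print the names of the source Machine Configs for one role.
# Reads `oc get machineconfigs --no-headers` style lines on stdin.
# Assumption: names look like NN-<role> or NN-<role>-<something>.
# Rendered configs (rendered-<role>-<hash>) do not start with a number,
# so they are filtered out by the same pattern.
source_configs_for_role() {
  local role="$1"
  awk -v role="$role" '$1 ~ ("^[0-9]+-" role "(-|$)") { print $1 }'
}

# Against a live cluster (not run here):
#   oc get machineconfigs --no-headers | source_configs_for_role worker
```

This only looks at names. A Machine Config with an unusual name would be missed, so treat the result as a quick view rather than the full picture.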

Machine Config already exists

If there is already a Machine Config, let's redirect the current Machine Config to a YAML file.

oc get MachineConfig 50-worker-chrony --output yaml > 50-worker-chrony.yaml

The YAML file should contain something like this.

~]$ cat 50-worker-chrony.yaml
apiVersion: machineconfiguration.openshift.io/v1
kind: MachineConfig
metadata:
  creationTimestamp: "2023-07-07T16:08:17Z"
  generation: 1
  labels:
    machineconfiguration.openshift.io/role: worker
  name: 50-worker-chrony
  resourceVersion: "32675"
  uid: 61e853ba-aca0-4d67-8991-840d1225393f
spec:
  config:
    ignition:
      version: 2.2.0
    storage:
      files:
      - contents:
          source: data:text/plain;charset=utf-8;base64,cG9vbCAxLnJoZWwucG9vbC5udHAub3JnIGlidXJzdApkcmlmdGZpbGUgL3Zhci9saWIvY2hyb255L2RyaWZ0Cm1ha2VzdGVwIDEuMCAzCnJ0Y3N5bmMKa2V5ZmlsZSAvZXRjL2Nocm9ueS5rZXlzCmxlYXBzZWN0eiByaWdodC9VVEMKbG9nZGlyIC92YXIvbG9nL2Nocm9ueQo=
        filesystem: root
        mode: 420
        path: /etc/chrony.conf

Notice that source is a base64 encoded string, and that mode 420 is the decimal form of the octal file mode 0644. The base64 --decode command can be used to return plaintext. This is the current content of the /etc/chrony.conf file in the Machine Config.

~]$ echo cG9vbCAxLnJoZWwucG9vbC5udHAub3JnIGlidXJzdApkcmlmdGZpbGUgL3Zhci9saWIvY2hyb255L2RyaWZ0Cm1ha2VzdGVwIDEuMCAzCnJ0Y3N5bmMKa2V5ZmlsZSAvZXRjL2Nocm9ueS5rZXlzCmxlYXBzZWN0eiByaWdodC9VVEMKbG9nZGlyIC92YXIvbG9nL2Nocm9ueQo= | base64 --decode
pool 1.rhel.pool.ntp.org iburst
driftfile /var/lib/chrony/drift
makestep 1.0 3
rtcsync
keyfile /etc/chrony.keys
leapsectz right/UTC
logdir /var/log/chrony
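Copying the base64 string by hand gets old quickly. Here is a minimal sketch that strips the data URL prefix and decodes whatever follows. The function name decode_source is my own, and the jsonpath in the comment assumes the file you want is the first entry under storage.files.

```shell
#!/usr/bin/env bash
# Decode the base64 payload of an Ignition "source" data URL read from stdin.
decode_source() {
  # Drop everything up to and including "base64," and decode the rest.
  sed 's/^.*base64,//' | base64 --decode
}

# Against a live cluster (not run here; files[0] is an assumption):
#   oc get machineconfig 50-worker-chrony \
#     --output jsonpath='{.spec.config.storage.files[0].contents.source}' | decode_source
```

This only handles sources that are plain base64. It does not handle a source that is also gzip-compressed.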

Create butane file

Let's create a butane file named 99-worker-chrony.bu that contains the content of the chrony.conf file.

- version (4.16.0 in this example) should match your version of OpenShift

variant: openshift
version: 4.16.0
metadata:
  name: 99-worker-chrony
  labels:
    machineconfiguration.openshift.io/role: worker
storage:
  files:
    - path: /etc/chrony.conf
      mode: 0644
      overwrite: true
      contents:
        inline: |
          pool 1.rhel.pool.ntp.org iburst
          driftfile /var/lib/chrony/drift
          makestep 1.0 3
          rtcsync
          keyfile /etc/chrony.keys
          leapsectz right/UTC
          logdir /var/log/chrony

If you do not have the butane CLI, it can be installed with apt-get install, dnf install, or yum install, depending on your distribution.

dnf install butane

Then let's use the butane CLI to create a Machine Config YAML file from the butane file. With the openshift variant, butane generates the ignition config and wraps it in a MachineConfig resource. Check out my article OpenShift - Machine Config Ignition Files for more details about ignition.

butane 99-worker-chrony.bu --output 99-worker-chrony.yaml

The YAML file should contain something like this. Notice this includes an ignition version, 3.4.0 in this example, and that butane has gzip-compressed the file content before base64 encoding it.

~]$ cat 99-worker-chrony.yaml
# Generated by Butane; do not edit
apiVersion: machineconfiguration.openshift.io/v1
kind: MachineConfig
metadata:
  labels:
    machineconfiguration.openshift.io/role: worker
  name: 99-worker-chrony
spec:
  config:
    ignition:
      version: 3.4.0
    storage:
      files:
        - contents:
            compression: gzip
            source: data:;base64,H4sIAAAAAAAC/yzM0Q3CMAwE0H9PkQkcOgMrwABt6qZWTBw5BilMj4D8nXTvrqlKWNBOEvxmrN5QLQfentYdduPDDxYK8bVaFN5iOk3riL8GHmuh7tTCghcwT33UBIXGf0KeJsdCo4PQ2jolfwfjfHq8364gmne2ea95evgEAAD//1t6cmmZAAAA
          mode: 420
          overwrite: true
          path: /etc/chrony.conf
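Because the generated file has compression: gzip, base64 --decode on its own returns binary data. Here is a minimal sketch that decodes and then decompresses the source so you can review what is about to be applied. The function name decode_gzip_source is my own, and the jsonpath in the comment assumes the file is the first entry under storage.files.

```shell
#!/usr/bin/env bash
# Decode a gzip-compressed, base64 encoded "source" data URL read from stdin.
decode_gzip_source() {
  # Drop the data URL prefix, decode base64, then decompress to stdout.
  sed 's/^.*base64,//' | base64 --decode | gzip -dc
}

# Against a live cluster (not run here; files[0] is an assumption):
#   oc get machineconfig 99-worker-chrony \
#     --output jsonpath='{.spec.config.storage.files[0].contents.source}' | decode_gzip_source
```

The same function works on the source value copied out of the local YAML file before it is applied.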

Update the Machine Config

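And then issue the following command to apply the Machine Config. Because the butane file uses the name 99-worker-chrony, this creates a new Machine Config rather than modifying 50-worker-chrony. Machine Configs are merged in name order, so the /etc/chrony.conf file in 99-worker-chrony takes precedence over the one in 50-worker-chrony.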

oc apply --filename 99-worker-chrony.yaml

There should now be a Machine Config named 99-worker-chrony.

~]$ oc get MachineConfig 99-worker-chrony
NAME               GENERATEDBYCONTROLLER   IGNITIONVERSION   AGE
99-worker-chrony                           3.4.0             13s

And the state of the worker Machine Config Pool should be Updating.

~]$ oc get MachineConfigPools
NAME     CONFIG                                             UPDATED   UPDATING   DEGRADED   MACHINECOUNT   READYMACHINECOUNT   UPDATEDMACHINECOUNT   DEGRADEDMACHINECOUNT   AGE
master   rendered-master-1ec876f89fa31673bcd9206c12063264   True      False      False      3              3                   3                     0                      635d
worker   rendered-worker-0ed1cb20db859faef7f01f41079c21b2   False     True       False      10             0                   0                     0                      635d

The machine-config Operator is the component that detects that a change was made to a Machine Config and kicks off the process of updating the Machine Config Pool.

~]$ oc get clusteroperator machine-config
NAME             VERSION   AVAILABLE   PROGRESSING   DEGRADED   SINCE   MESSAGE
machine-config   4.16.30   True        False         False      182d

Once the machine-config Operator has finished updating the Machine Config Pool, the worker Machine Config Pool should have a new rendered Machine Config and UPDATED should be True.

~]$ oc get MachineConfigPools
NAME     CONFIG                                             UPDATED   UPDATING   DEGRADED   MACHINECOUNT   READYMACHINECOUNT   UPDATEDMACHINECOUNT   DEGRADEDMACHINECOUNT   AGE
master   rendered-master-1ec876f89fa31673bcd9206c12063264   True      False      False      3              3                   3                     0                      635d
worker   rendered-worker-9c6d2a445989d365bfd5ba8d4c6b4aef   True      False      False      10             10                  10                    0                      635d
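Reading three True/False columns by eye is error prone when there are several pools. Here is a minimal sketch that turns each row into a single word. The function name pool_state is my own, and it assumes the column order shown above with a non-empty CONFIG column.

```shell
#!/usr/bin/env bash
# Summarize each Machine Config Pool as updated, updating, or degraded.
# Reads `oc get machineconfigpools --no-headers` style lines on stdin.
# Assumption: columns are NAME CONFIG UPDATED UPDATING DEGRADED ...
pool_state() {
  awk '{
    if ($5 == "True")      state = "degraded"
    else if ($4 == "True") state = "updating"
    else if ($3 == "True") state = "updated"
    else                   state = "unknown"
    print $1, state
  }'
}

# Against a live cluster (not run here):
#   oc get machineconfigpools --no-headers | pool_state
# To block until the worker pool reports Updated:
#   oc wait machineconfigpool/worker --for=condition=Updated --timeout=30m
```

Degraded is checked first on purpose, since a degraded pool can also report UPDATING as True.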

And the new rendered Machine Config should indeed be new, only 30 minutes old in this example.

~]$ oc get MachineConfig rendered-worker-9c6d2a445989d365bfd5ba8d4c6b4aef
NAME                                               GENERATEDBYCONTROLLER                      IGNITIONVERSION   AGE
rendered-worker-9c6d2a445989d365bfd5ba8d4c6b4aef   64460169e27091d8f8373b0952604ba2700d6d67   3.4.0             30m

Are we good?

Let's check the machine-config Cluster Operator. If the Operator is degraded and has some error message, we are not good. Check out my article OpenShift - Resolve cluster operator degraded for more details on degraded.

~]$ oc get clusteroperator machine-config
NAME             VERSION   AVAILABLE   PROGRESSING   DEGRADED   SINCE   MESSAGE
machine-config   4.16.30   True        False         True       182d    Failed to resync 4.16.30 because: error during syncRequiredMachineConfigPools: [context deadline exceeded, failed to update clusteroperator: [client rate limiter Wait returned an error: context deadline exceeded, error MachineConfigPool worker is not ready, retrying. Status: (pool degraded: true total: 10, ready 8, updated: 8, unavailable: 1)]]

Let's check the Machine Config Pool. If the worker pool is degraded, we are not good. Notice in this example that the issue is that the worker Machine Config Pool is supposed to have 10 machines but there are only 8 machines that are ready.

~]$ oc get machineconfigpools
NAME     CONFIG                                             UPDATED   UPDATING   DEGRADED   MACHINECOUNT   READYMACHINECOUNT   UPDATEDMACHINECOUNT   DEGRADEDMACHINECOUNT   AGE
master   rendered-master-1ec876f89fa31673bcd9206c12063264   True      False      False      3              3                   3                     0                      636d
worker   rendered-worker-9c6d2a445989d365bfd5ba8d4c6b4aef   False     True       True       10             8                   8                     1                      636d
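The gap between MACHINECOUNT and READYMACHINECOUNT is the number to watch here. Here is a minimal sketch that prints each pool that has fewer ready machines than it should, along with how many are missing. The function name lagging_pools is my own, and it assumes the column order shown above with a non-empty CONFIG column.

```shell
#!/usr/bin/env bash
# Print "<pool> <missing>" for pools where READYMACHINECOUNT < MACHINECOUNT.
# Reads `oc get machineconfigpools --no-headers` style lines on stdin.
# Assumption: column 6 is MACHINECOUNT and column 7 is READYMACHINECOUNT.
lagging_pools() {
  awk '($7 + 0) < ($6 + 0) { print $1, $6 - $7 }'
}

# Against a live cluster (not run here):
#   oc get machineconfigpools --no-headers | lagging_pools
```

An empty result means every pool has all of its machines ready.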

Viewing the worker Machine Config Pool YAML may give more detail on the underlying issue, perhaps something like this in the status conditions.

~]$ oc get machineconfigpool worker --output yaml
  - lastTransitionTime: "2025-04-03T03:01:40Z"
    message: 'Node my-cluster-infra-s2xtt is reporting: "failed to drain node:
      my-cluster-infra-s2xtt after 1 hour. Please see machine-config-controller
      logs for more information"'
    reason: 1 nodes are reporting degraded status on sync
    status: "True"
    type: NodeDegraded

Notice in the above example that the issue has something to do with the node whose name ends in s2xtt, which could not be drained. Perhaps the node is misconfigured. In this example, the node listed below as my-cluster-netobserv-infra-s2xtt has scheduling disabled.

~]$ oc get nodes
NAME                               STATUS                     ROLES            AGE    VERSION
my-cluster-edge-78v55              Ready                      infra,worker     633d   v1.29.10+67d3387
my-cluster-edge-tk6gm              Ready                      infra,worker     633d   v1.29.10+67d3387
my-cluster-infra-7hpsl             Ready                      infra,worker     633d   v1.29.10+67d3387
my-cluster-infra-jld8v             Ready                      infra,worker     633d   v1.29.10+67d3387
my-cluster-infra-wxjgn             Ready                      infra,worker     633d   v1.29.10+67d3387
my-cluster-master-0                Ready                      master           636d   v1.29.10+67d3387
my-cluster-master-1                Ready                      master           636d   v1.29.10+67d3387
my-cluster-master-2                Ready                      master           636d   v1.29.10+67d3387
my-cluster-netobserv-infra-4wdpp   Ready                      infra,worker     182d   v1.29.10+67d3387
my-cluster-netobserv-infra-s2xtt   Ready,SchedulingDisabled   infra,worker     182d   v1.29.10+67d3387
my-cluster-netobserv-infra-xwws4   Ready                      infra,worker     182d   v1.29.10+67d3387
my-cluster-worker-fttdz            Ready                      compute,worker   16h    v1.29.10+67d3387
my-cluster-worker-jttdr            Ready                      compute,worker   16h    v1.29.10+67d3387

Thus, perhaps the node just needs to be uncordoned.

oc adm uncordon my-cluster-netobserv-infra-s2xtt
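On a cluster with many nodes, it is easy to miss the one that has scheduling disabled. Here is a minimal sketch that prints only the cordoned nodes. The function name cordoned_nodes is my own, and it assumes the STATUS value is the second column as in the output above.

```shell
#!/usr/bin/env bash
# Print the names of nodes whose STATUS includes SchedulingDisabled.
# Reads `oc get nodes --no-headers` style lines on stdin.
cordoned_nodes() {
  awk '$2 ~ /SchedulingDisabled/ { print $1 }'
}

# Against a live cluster (not run here):
#   oc get nodes --no-headers | cordoned_nodes
```

A node is also cordoned while the machine-config Operator is in the middle of updating it, so a node that shows up here is not necessarily stuck. Check the Machine Config Pool before uncordoning anything by hand.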

Debug node

You can start a debug pod on a node and validate that the /etc/chrony.conf file on the node is an exact match of the chrony.conf file in the Machine Config.

~]$ oc debug node/my-cluster-worker-52wkr
Temporary namespace openshift-debug-7x9l9 is created for debugging node...
Starting pod/my-cluster-worker-52wkr-debug-lwgxb ...
To use host binaries, run `chroot /host`
Pod IP: 10.84.188.86
If you don't see a command prompt, try pressing enter.
sh-5.1# chroot /host
sh-5.1# cat /etc/chrony.conf
pool 1.rhel.pool.ntp.org iburst
driftfile /var/lib/chrony/drift
makestep 1.0 3
rtcsync
keyfile /etc/chrony.keys
leapsectz right/UTC
logdir /var/log/chrony
sh-5.1# exit
exit
Removing debug pod ...
Temporary namespace openshift-debug-7x9l9 was removed.
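Comparing the two files by eye works for seven lines, but a diff is safer. Here is a minimal sketch that compares content on stdin with a local file holding the expected content. The function name files_match and the file name chrony.conf.expected are my own.

```shell
#!/usr/bin/env bash
# Succeed (exit 0) only if stdin is identical to the expected file.
#   $1: path to a local file with the expected content
files_match() {
  diff -u "$1" - >/dev/null
}

# Against a live cluster (not run here). The debug pod banner goes to
# stderr, so it is discarded to keep only the file content:
#   oc debug node/my-cluster-worker-52wkr -- chroot /host cat /etc/chrony.conf 2>/dev/null \
#     | files_match chrony.conf.expected && echo match || echo mismatch
```

Drop the redirect to /dev/null inside the function if you would rather see the differing lines than a yes or no answer.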
