This assumes you have already

- Installed the OpenShift API for Data Protection (OADP) Operator

- Created an OpenShift API for Data Protection (OADP) backup storage location

Let's say you want to use OpenShift API for Data Protection (OADP) to back up images and store the backups in an Amazon Web Services (AWS) S3 bucket.

Let's create a YAML file named my-data-protection-application.yaml that contains the following, replacing my-bucket-asdfadkjsfasfljdf with the name of your S3 bucket. Notice that this example specifies backupImages: true. When backupImages: true is specified, you must also specify a prefix (prefix: my-prefix in this example).

    apiVersion: oadp.openshift.io/v1alpha1
    kind: DataProtectionApplication
    metadata:
      name: my-data-protection-application
      namespace: openshift-adp
    spec:
      backupImages: true
      backupLocations:
        - name: my-backup-storage-location
          velero:
            default: true
            config:
              region: us-east-1
              profile: default
            credential:
              key: cloud
              name: cloud-credentials
            objectStorage:
              bucket: my-bucket-asdfadkjsfasfljdf
              prefix: my-prefix
            provider: aws
      configuration:
        velero:
          defaultPlugins:
            - openshift
            - aws
          nodeSelector: worker
          resourceTimeout: 10m
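
Since backupImages: true without a prefix is easy to overlook, a quick pre-flight check before applying the file may help. The following is only a minimal sketch and not part of OADP: check_prefix_for_images is a hypothetical helper name, and it does a crude line-based grep over the file rather than real YAML parsing.

```shell
# Hypothetical helper: crude, line-based check of a DataProtectionApplication file.
# Fails when backupImages is true but no non-empty "prefix:" line exists.
check_prefix_for_images() {
  file=$1
  if grep -Eq '^[[:space:]]*backupImages:[[:space:]]*true' "$file" &&
     ! grep -Eq '^[[:space:]]*prefix:[[:space:]]*[^[:space:]]' "$file"; then
    echo "backupImages is true but no prefix is set in $file" >&2
    return 1
  fi
  return 0
}

# Usage (file name taken from this example):
#   check_prefix_for_images my-data-protection-application.yaml && \
#     oc apply --filename my-data-protection-application.yaml
```

Because the check is line-based, it cannot tell which backup location a prefix belongs to, so treat a pass as a hint rather than a guarantee.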

Let's use the oc apply command to create my-data-protection-application.

oc apply --filename my-data-protection-application.yaml

Let's ensure the status is Reconciled.

    ~]$ oc describe DataProtectionApplication my-data-protection-application --namespace openshift-adp
    . . .
    Status:
      Conditions:
        Last Transition Time:  2025-04-10T01:25:11Z
        Message:               Reconcile complete
        Reason:                Complete
        Status:                True
        Type:                  Reconciled
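
The Reconciled condition can also be checked from a script instead of being read out of the describe output. This is a minimal sketch, assuming oc is already logged in to the cluster. dpa_reconciled is a hypothetical helper name, and the JSONPath filter simply selects the condition whose type is Reconciled.

```shell
# Hypothetical helper: succeeds only when the DataProtectionApplication
# reports a Reconciled condition whose status is True.
dpa_reconciled() {
  name=$1
  namespace=$2
  status=$(oc get dataprotectionapplication "$name" --namespace "$namespace" \
    --output jsonpath='{.status.conditions[?(@.type=="Reconciled")].status}')
  [ "$status" = "True" ]
}

# Usage:
#   dpa_reconciled my-data-protection-application openshift-adp && echo "Reconciled"
```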

The Data Protection Application should provision additional "velero" resources in the openshift-adp namespace.

    ~]$ oc get all --namespace openshift-adp
    NAME                                                    READY   STATUS    RESTARTS   AGE
    pod/openshift-adp-controller-manager-55f68b778f-tlr8v   1/1     Running   0          8m52s
    pod/velero-6777878978-nvqm4                             1/1     Running   0          3m10s

    NAME                                                       TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
    service/openshift-adp-controller-manager-metrics-service   ClusterIP   172.30.220.147   <none>        8443/TCP   9m3s
    service/openshift-adp-velero-metrics-svc                   ClusterIP   172.30.87.161    <none>        8085/TCP   3m10s

    NAME                                               READY   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/openshift-adp-controller-manager   1/1     1            1           8m52s
    deployment.apps/velero                             1/1     1            1           3m10s

    NAME                                                          DESIRED   CURRENT   READY   AGE
    replicaset.apps/openshift-adp-controller-manager-55f68b778f   1         1         1       8m52s
    replicaset.apps/velero-6777878978                             1         1         1       3m10s

Next let's check to see if the backupStorageLocation is Available.

    ~]$ oc get backupStorageLocations --namespace openshift-adp
    NAME                         PHASE       LAST VALIDATED   AGE   DEFAULT
    my-backup-storage-location   Available   60s              64s   true

Now let's say you want to back up the resources, and the images used by those resources, in namespace my-project. Let's create a Velero Backup resource for this in a file named my-project-backup.yml. By default, the Time To Live (TTL) is 30 days (720 hours), which means the backup will be retained for 30 days. Once the backup is older than 30 days, it will be removed from the storage location.

    apiVersion: velero.io/v1
    kind: Backup
    metadata:
      name: my-project
      labels:
        velero.io/storage-location: my-backup-storage-location
      namespace: openshift-adp
    spec:
      hooks: {}
      includedNamespaces:
        - my-project
      includedResources: []
      excludedResources: []
      storageLocation: my-backup-storage-location

Let's use the oc apply command to create the velero backup resource.

~]$ oc apply --filename my-project-backup.yml

backup.velero.io/my-project created

There should now be a backup resource named my-project in the openshift-adp namespace.

~]$ oc get backups --namespace openshift-adp

NAME AGE

my-project 102s

If you then describe the backup resource named my-project, the Phase under Status should be InProgress.

    ~]$ oc describe backup my-project --namespace openshift-adp
    Status:
      Expiration:       2025-05-16T01:25:16Z
      Format Version:   1.1.0
      Hook Status:
      Phase:            InProgress
      Progress:
        Items Backed Up:  18
        Total Items:      18
      Start Timestamp:  2025-04-16T01:25:13Z
      Version:          1
    Events:             <none>

And then shortly thereafter, the status should be Completed. Awesome!
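
Rather than re-running oc describe by hand, the phase can be polled until the backup reaches a final state. This is a minimal sketch, assuming oc is logged in to the cluster. wait_for_backup is a hypothetical helper name, and the attempt count and delay defaults are arbitrary choices for illustration.

```shell
# Hypothetical helper: poll .status.phase of a Velero Backup until it finishes.
# Arguments: backup name, namespace, max attempts (default 60), delay in seconds (default 5).
wait_for_backup() {
  name=$1
  namespace=$2
  attempts=${3:-60}
  delay=${4:-5}
  phase=""
  while [ "$attempts" -gt 0 ]; do
    phase=$(oc get backup "$name" --namespace "$namespace" \
      --output jsonpath='{.status.phase}')
    case "$phase" in
      Completed)
        echo "$phase"
        return 0 ;;
      Failed|PartiallyFailed|FailedValidation)
        echo "$phase"
        return 1 ;;
    esac
    attempts=$((attempts - 1))
    sleep "$delay"
  done
  echo "timed out, last phase: ${phase:-unknown}" >&2
  return 1
}

# Usage:
#   wait_for_backup my-project openshift-adp
```

The function prints the final phase and returns a non-zero exit code for anything other than Completed, so it can gate later steps in a script.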

Notice also that the Time To Live (TTL) is 30 days which means the backup will be retained for 30 days and Expiration is 30 days from when the backup was created. In 30 days, the items that were backed up will be removed from the storage location.

    ~]$ oc describe backup my-project --namespace openshift-adp
    Spec:
      Ttl:  30d
    Status:
      Completion Timestamp:  2025-04-16T01:25:16Z
      Expiration:            2025-05-16T01:25:16Z
      Format Version:        1.1.0
      Hook Status:
      Phase:                 Completed
      Progress:
        Items Backed Up:  18
        Total Items:      18
      Start Timestamp:       2025-04-16T01:25:13Z
      Version:               1
    Events:                  <none>
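
The Expiration in the output above is simply a timestamp plus the TTL. As a worked example of that arithmetic, here is a small sketch that adds 720 hours (30 days x 24 hours) to the 2025-04-16T01:25:16Z timestamp from the output. backup_expiration is a hypothetical helper name, and the sketch assumes GNU date (the -d option behaves differently on macOS/BSD).

```shell
# Hypothetical helper: add a TTL in hours to a UTC timestamp (GNU date assumed).
backup_expiration() {
  start=$1      # e.g. 2025-04-16T01:25:16Z
  ttl_hours=$2  # e.g. 720 (30 days x 24 hours)
  # Convert to seconds since the epoch first, then add the TTL in seconds.
  epoch=$(date -u -d "$start" +%s) || return 1
  date -u -d "@$((epoch + ttl_hours * 3600))" +%Y-%m-%dT%H:%M:%SZ
}

backup_expiration 2025-04-16T01:25:16Z 720
# → 2025-05-16T01:25:16Z
```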

Recall in this example that OADP was configured to store the backups in an Amazon Web Services (AWS) S3 Bucket named my-bucket-asdfadkjsfasfljdf. The aws s3api list-objects command can be used to list the objects in the S3 Bucket. Something like this should be returned, where there are my-project objects in the S3 Bucket. Awesome, it works!

    ~]$ aws s3api list-objects --bucket my-bucket-asdfadkjsfasfljdf --profile velero | jq '.Contents[].Key'
    "my-prefix/backups/my-project-20250522010051/my-project-20250522010051-csi-volumesnapshotclasses.json.gz"
    "my-prefix/backups/my-project-20250522010051/my-project-20250522010051-csi-volumesnapshotcontents.json.gz"
    "my-prefix/backups/my-project-20250522010051/my-project-20250522010051-csi-volumesnapshots.json.gz"
    "my-prefix/backups/my-project-20250522010051/my-project-20250522010051-itemoperations.json.gz"
    "my-prefix/backups/my-project-20250522010051/my-project-20250522010051-logs.gz"
    "my-prefix/backups/my-project-20250522010051/my-project-20250522010051-podvolumebackups.json.gz"
    "my-prefix/backups/my-project-20250522010051/my-project-20250522010051-resource-list.json.gz"
    "my-prefix/backups/my-project-20250522010051/my-project-20250522010051-results.gz"
    "my-prefix/backups/my-project-20250522010051/my-project-20250522010051-volumeinfo.json.gz"
    "my-prefix/backups/my-project-20250522010051/my-project-20250522010051-volumesnapshots.json.gz"
    "my-prefix/backups/my-project-20250522010051/my-project-20250522010051.tar.gz"
    "my-prefix/backups/my-project-20250522010051/velero-backup.json"

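The keys in the listing follow the pattern prefix/backups/backup-name/file, so the backup names can be pulled out of the listing with a small filter. This is a minimal sketch under that assumption. backup_names_from_keys is a hypothetical helper name, and it reads keys (with or without the surrounding quotes printed by jq) from standard input.

```shell
# Hypothetical helper: read S3 object keys on stdin and print each distinct
# backup name, taken as the path segment right after "backups".
backup_names_from_keys() {
  tr -d '"' |
    awk -F/ '{
      for (i = 1; i < NF; i++) {
        if ($i == "backups" && $(i + 1) != "") { print $(i + 1); break }
      }
    }' |
    LC_ALL=C sort -u
}

# Usage (same listing command as in this example):
#   aws s3api list-objects --bucket my-bucket-asdfadkjsfasfljdf --profile velero \
#     | jq '.Contents[].Key' | backup_names_from_keys
```
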
The aws s3api get-object command can be used to download one of the files in the S3 bucket to your present working directory.

aws s3api get-object --bucket my-bucket-asdfadkjsfasfljdf --profile velero --key my-prefix/backups/my-project-20250522010051/my-project-20250522010051.tar.gz resources.tar.gz

The tar command can be used to list the files in the gzip compressed tar archive. In this example, my-project-20250522010051.tar.gz contains a handful of Python 3.11 image streams. An image stream doesn't have anything to do with "streaming" services like Spotify (to stream music) or Netflix (to stream movies). Instead, an image stream lists similar images, for example similar Python images or similar Node.js images.

    ~]# tar -tf resources.tar.gz
    resources/imagetags.image.openshift.io/namespaces/my-project/python-311:latest.json
    resources/imagetags.image.openshift.io/v1-preferredversion/namespaces/my-project/python-311:latest.json
    resources/imagetags.image.openshift.io/namespaces/my-project/python-311:v4.7.0-202205312157.p0.g7706ed4.assembly.stream.json
    resources/imagetags.image.openshift.io/v1-preferredversion/namespaces/my-project/python-311:v4.7.0-202205312157.p0.g7706ed4.assembly.stream.json
    resources/imagestreams.image.openshift.io/namespaces/my-project/python-311.json
    resources/imagestreams.image.openshift.io/v1-preferredversion/namespaces/my-project/python-311.json
    resources/imagestreamtags.image.openshift.io/namespaces/my-project/python-311:latest.json
    resources/imagestreamtags.image.openshift.io/v1-preferredversion/namespaces/my-project/python-311:latest.json
    resources/imagestreamtags.image.openshift.io/namespaces/my-project/ose-hello-openshift-rhel8:v4.7.0-202205312157.p0.g7706ed4.assembly.stream.json
    resources/imagestreamtags.image.openshift.io/v1-preferredversion/namespaces/my-project/python-311:v4.7.0-202205312157.p0.g7706ed4.assembly.stream.json
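
For a larger archive, a per-type summary can be easier to read than the full listing. This is a minimal sketch that counts entries by the second path segment (the resource type) of each line. count_by_resource_type is a hypothetical helper name, and it assumes every entry starts with resources/ as in the listing above.

```shell
# Hypothetical helper: read "tar -tf" output on stdin and print
# "<resource type> <count>" for each type found under resources/.
count_by_resource_type() {
  awk -F/ '$1 == "resources" && NF > 2 { n[$2]++ }
           END { for (t in n) print t, n[t] }' |
    LC_ALL=C sort
}

# Usage:
#   tar -tf resources.tar.gz | count_by_resource_type
```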

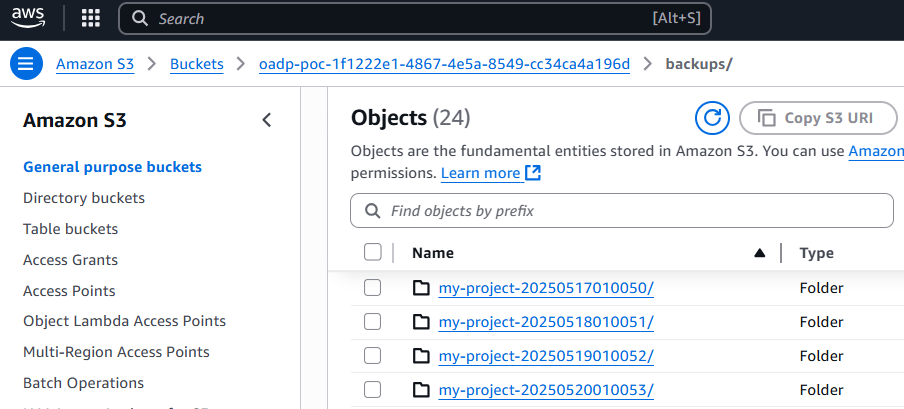

Or you can view the backups in the Amazon Web Services (AWS) S3 console.

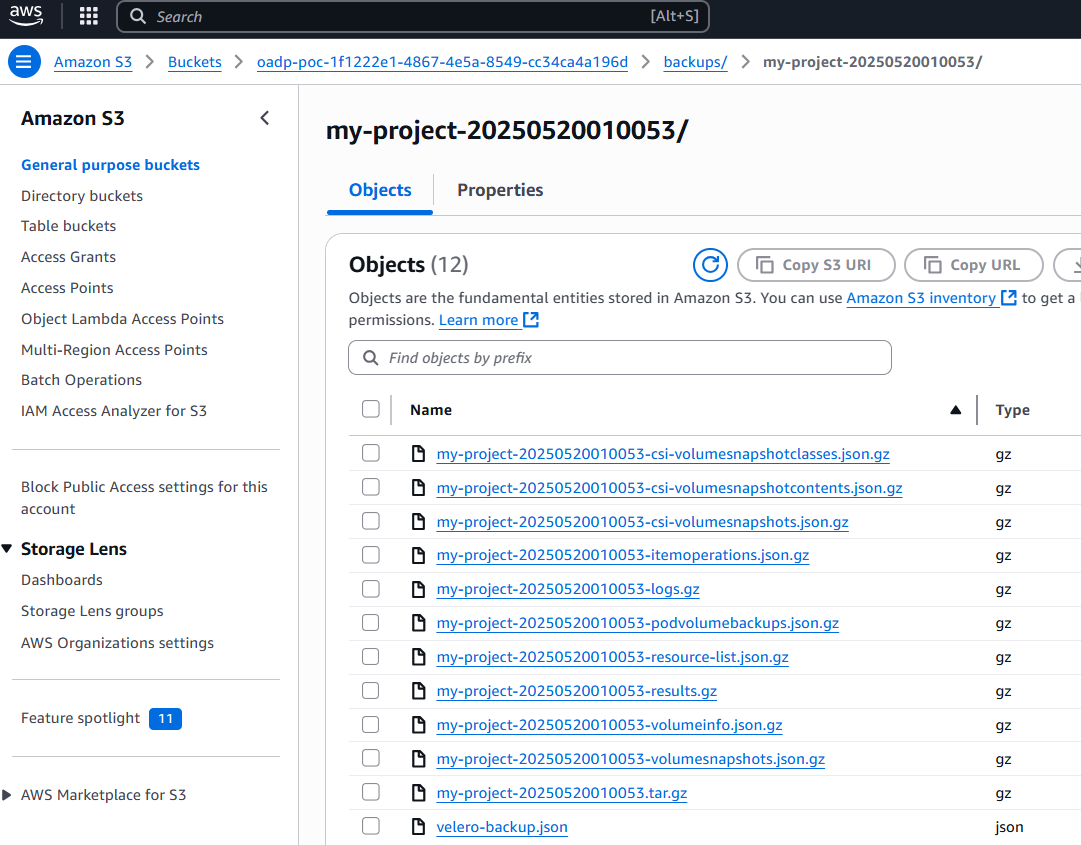

And selecting one of the backup folders should display a gzip compressed file for the various resources that were backed up.
