Event Router fetches the events from all namespaces in the OpenShift cluster and writes them to the logs of one or more eventrouter pods in the openshift-logging namespace. Often, a logging subsystem then collects the logs from the eventrouter pods in the openshift-logging namespace and forwards them to a log store, such as Loki or Elasticsearch. From there they can be viewed in an observability system, such as Kibana.

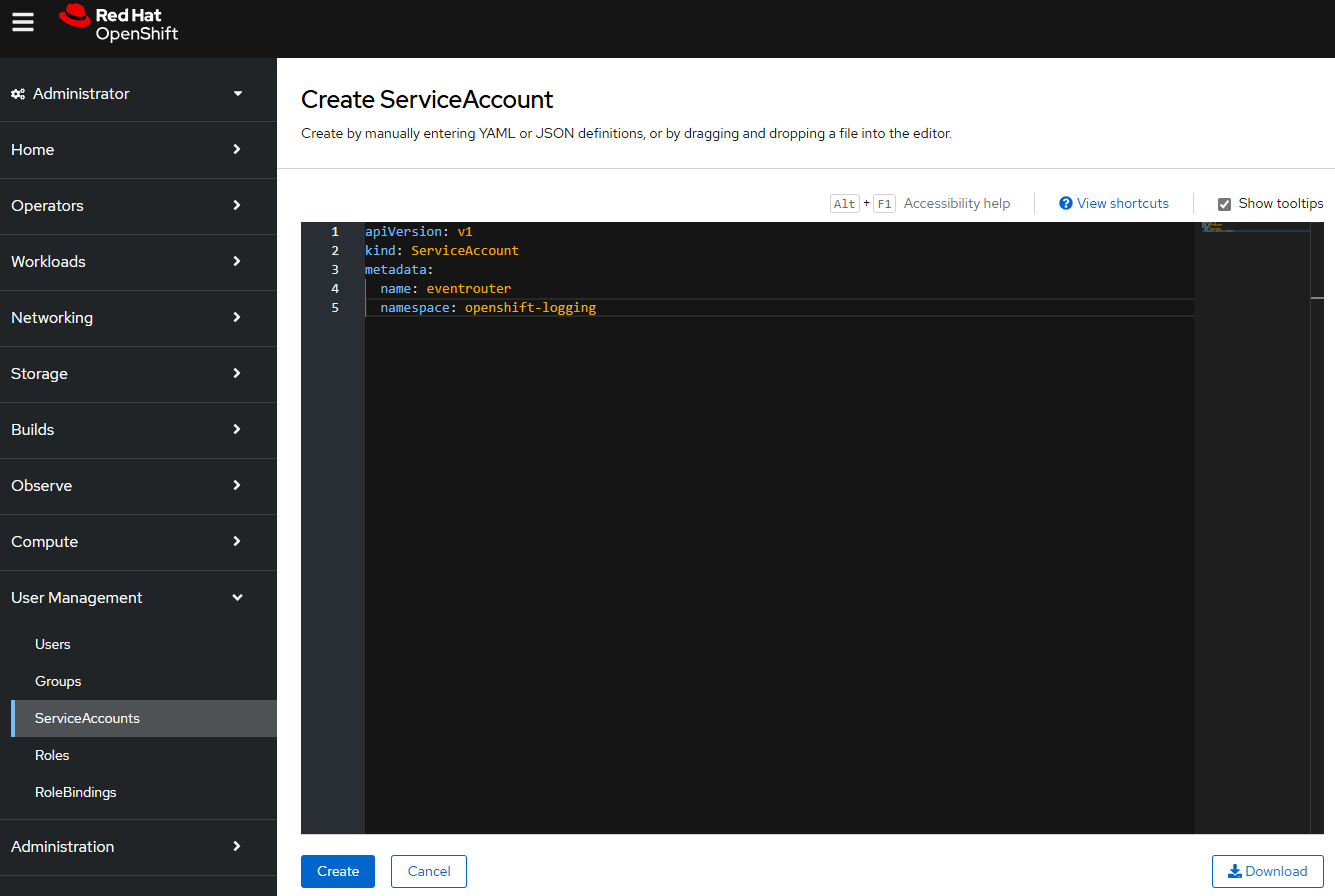

Create the eventrouter service account

First, let's create a Service Account named eventrouter in the openshift-logging namespace. For example, let's say you have the following in a file named service_account.yml.

apiVersion: v1
kind: ServiceAccount
metadata:
  name: eventrouter
  namespace: openshift-logging

The oc apply command can be used to create the service account.

oc apply -f service_account.yml

Or, in the OpenShift console, go to User Management > ServiceAccounts > Create Service Account.

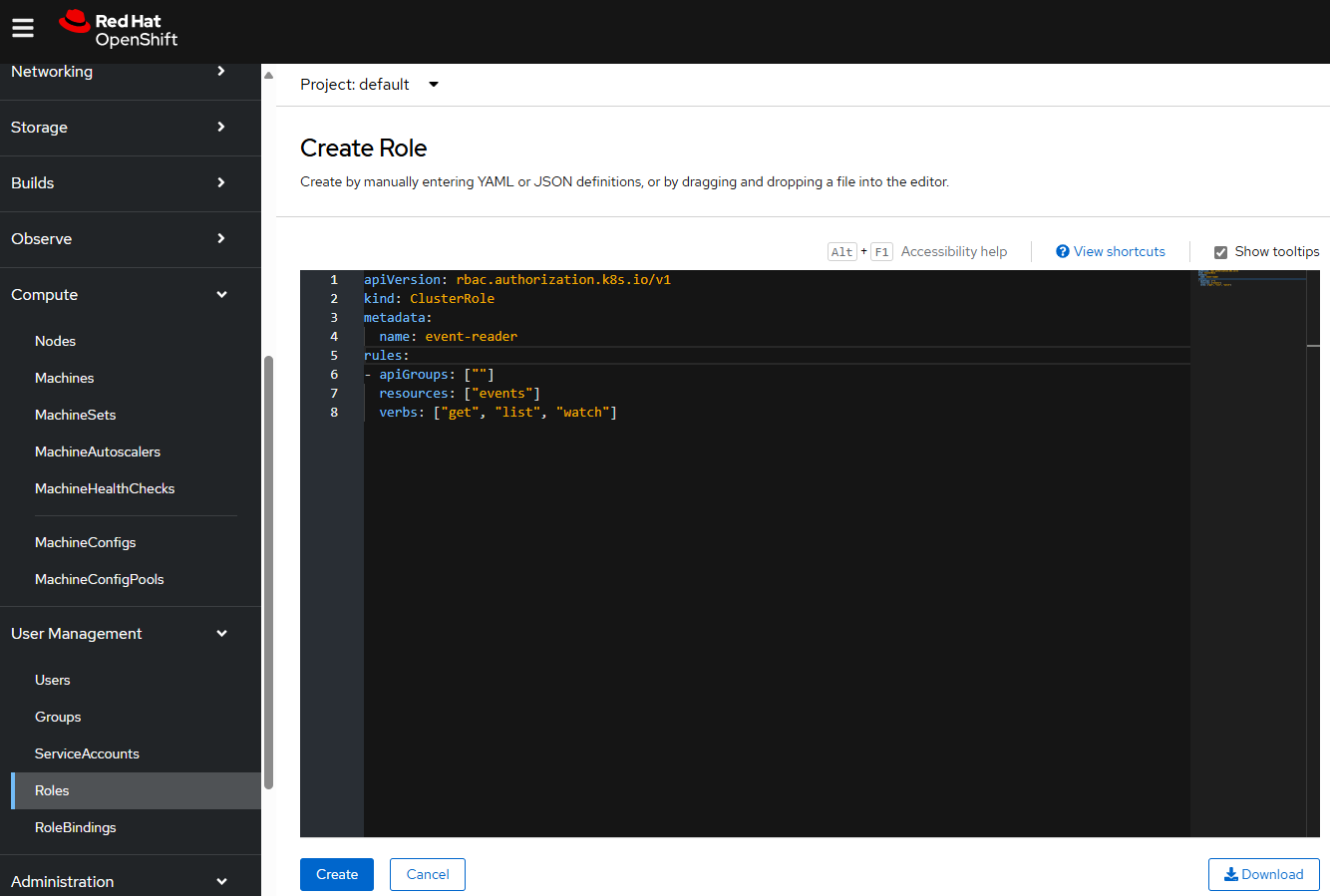
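If you'd rather not keep a YAML file for this step, you can also create the service account imperatively with oc create serviceaccount. Here is a minimal sketch. It assumes a bash shell that is already logged in with oc, and the helper name ensure_eventrouter_sa is hypothetical. The helper only creates the service account when oc get can't find it, so it should be safe to run more than once.

```shell
# Hypothetical helper: create the eventrouter service account in the
# openshift-logging namespace only if it does not already exist.
ensure_eventrouter_sa() {
  if oc get serviceaccount eventrouter --namespace openshift-logging >/dev/null 2>&1; then
    echo "serviceaccount/eventrouter already exists"
  else
    oc create serviceaccount eventrouter --namespace openshift-logging
  fi
}
```

For example, if you run ensure_eventrouter_sa twice, the first run should create the service account and the second should just report that it already exists.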

Create the Cluster Role

Next, let's create a Cluster Role. For example, let's say you have the following in a file named cluster_role.yml.

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: event-reader
rules:
- apiGroups: [""]
  resources: ["events"]
  verbs: ["get", "list", "watch"]

The oc apply command can be used to create the cluster role.

oc apply -f cluster_role.yml

Or, in the OpenShift console's Administrator perspective, go to User Management > Roles > Create Role.

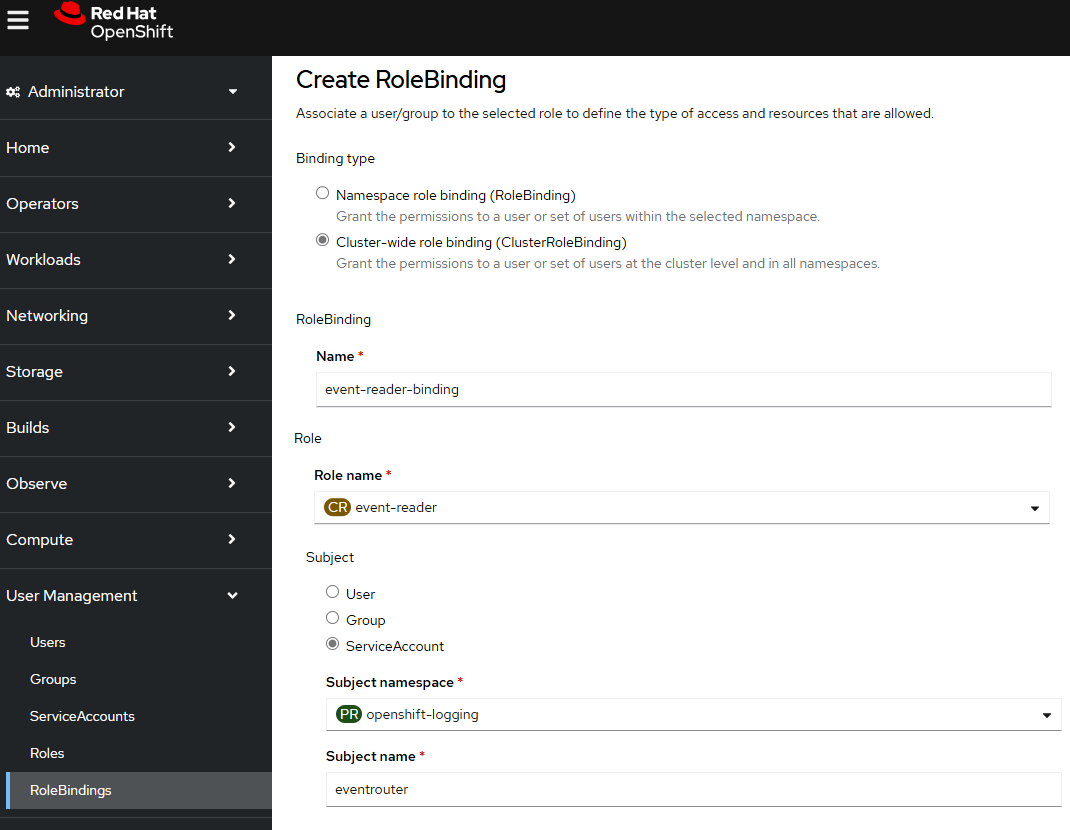
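You can also create the same cluster role without a YAML file. This is a sketch that assumes bash, and create_event_reader_role is a hypothetical helper name. Any extra arguments are passed through to oc create. For example, adding --dry-run=client -o yaml prints the generated manifest instead of creating it, which is a handy way to compare it against cluster_role.yml.

```shell
# Hypothetical helper: imperative equivalent of cluster_role.yml.
# Grants read-only access (get, list, watch) to events in the core ("") API group.
# Extra arguments (e.g. --dry-run=client -o yaml) are passed through to oc create.
create_event_reader_role() {
  oc create clusterrole event-reader \
    --verb=get,list,watch \
    --resource=events "$@"
}
```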

Create the Cluster Role Binding

Next, let's create a Cluster Role Binding. For example, let's say you have the following in a file named cluster_role_binding.yml.

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: event-reader-binding
subjects:
- kind: ServiceAccount
  name: eventrouter
  namespace: openshift-logging
roleRef:
  kind: ClusterRole
  name: event-reader

The oc apply command can be used to create the cluster role binding.

oc apply -f cluster_role_binding.yml

Or, in the OpenShift console, go to User Management > RoleBindings and create the Cluster Role Binding.

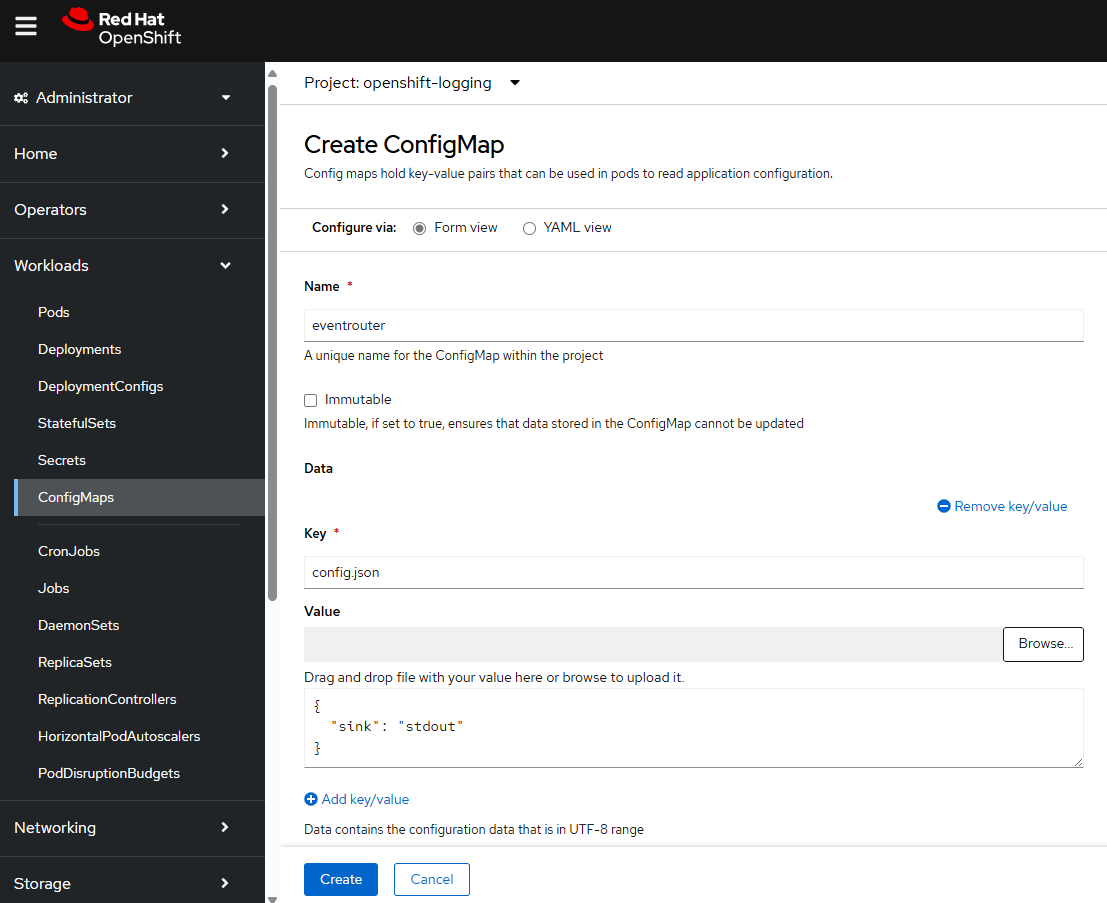
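You can also create the binding imperatively. Afterwards, oc auth can-i can confirm that the whole chain works, meaning the eventrouter service account really can get, list, and watch events in every namespace. This sketch assumes bash and a user who is allowed to impersonate service accounts, such as a cluster admin. The helper names are hypothetical.

```shell
# Hypothetical helper: imperative equivalent of cluster_role_binding.yml.
create_event_reader_binding() {
  oc create clusterrolebinding event-reader-binding \
    --clusterrole=event-reader \
    --serviceaccount=openshift-logging:eventrouter
}

# Hypothetical helper: ask the API server, while impersonating the
# eventrouter service account, whether each verb is allowed on events
# across all namespaces. oc auth can-i prints "yes" or "no".
check_eventrouter_rbac() {
  local verb
  for verb in get list watch; do
    printf '%s events: ' "$verb"
    oc auth can-i "$verb" events --all-namespaces \
      --as=system:serviceaccount:openshift-logging:eventrouter
  done
}
```

Each line that check_eventrouter_rbac prints should end in yes. A no usually means the binding's subject or roleRef doesn't match the names used in the YAML files above.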

Create the Config Map

Next, let's create a Config Map. For example, let's say you have the following in a file named config_map.yml.

apiVersion: v1
kind: ConfigMap
metadata:
  name: eventrouter
  namespace: openshift-logging
data:
  config.json: |-
    {
      "sink": "stdout"
    }

The oc apply command can be used to create the config map.

oc apply -f config_map.yml

Or, in the OpenShift console, go to Workloads > ConfigMaps > Create ConfigMap.
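If you already have config.json as a local file, oc create configmap --from-file can build the same config map. This is a minimal sketch. It assumes bash and a local file that contains {"sink": "stdout"}, and create_eventrouter_configmap is a hypothetical helper name. The config.json= prefix names the key explicitly, so the key matches the one in config_map.yml no matter what the local file is called.

```shell
# Hypothetical helper: imperative equivalent of config_map.yml.
# Expects a local file (default: ./config.json) containing {"sink": "stdout"}.
# "--from-file=config.json=PATH" stores the file under the key config.json.
create_eventrouter_configmap() {
  local config_file="${1:-config.json}"
  if [ ! -f "$config_file" ]; then
    echo "missing $config_file" >&2
    return 1
  fi
  oc create configmap eventrouter \
    --namespace openshift-logging \
    --from-file=config.json="$config_file"
}
```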

Create the Deployment

Next, let's create the eventrouter deployment in the openshift-logging namespace. For example, let's say you have the following in a file named deployment.yml. The deployment mounts the eventrouter config map at /etc/eventrouter, so the config.json key in the config map is available in the container as /etc/eventrouter/config.json. The container also runs as the eventrouter service account. The cluster role binding binds that service account to the event-reader cluster role, which grants get, list, and watch permissions on events.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: eventrouter
  namespace: openshift-logging
  labels:
    component: "eventrouter"
    logging-infra: "eventrouter"
    provider: "openshift"
spec:
  selector:
    matchLabels:
      component: "eventrouter"
      logging-infra: "eventrouter"
      provider: "openshift"
  replicas: 1
  template:
    metadata:
      labels:
        component: "eventrouter"
        logging-infra: "eventrouter"
        provider: "openshift"
      name: eventrouter
    spec:
      serviceAccountName: eventrouter
      containers:
      - name: kube-eventrouter
        image: "registry.redhat.io/openshift-logging/eventrouter-rhel8:v0.4"
        imagePullPolicy: IfNotPresent
        resources:
          requests:
            cpu: "100m"
            memory: "128Mi"
        volumeMounts:
        - name: config-volume
          mountPath: /etc/eventrouter
      volumes:
      - name: config-volume
        configMap:
          name: eventrouter

The oc apply command can be used to create the deployment.

oc apply -f deployment.yml
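Before looking at the logs, it can help to wait for the rollout to finish and confirm that the config map was mounted where the container expects it. This sketch assumes bash and the deployment name eventrouter from deployment.yml. It also assumes that the eventrouter image includes cat, which I haven't verified. The helper name is hypothetical.

```shell
# Hypothetical helper, assuming the deployment is named eventrouter.
verify_eventrouter_deployment() {
  # Wait up to 2 minutes for the deployment's pod to become available
  oc rollout status deployment/eventrouter \
    --namespace openshift-logging --timeout=120s || return 1
  # Print the config file mounted from the eventrouter config map
  oc exec deployment/eventrouter --namespace openshift-logging \
    -- cat /etc/eventrouter/config.json
}
```

If everything is wired up correctly, the last thing printed should be the contents of config.json from the config map.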

Viewing Logs

The oc get pods command can be used to confirm that there is now an eventrouter pod in the openshift-logging namespace.

~]# oc get pods --namespace openshift-logging

NAME                                          READY   STATUS    RESTARTS   AGE
cluster-logging-eventrouter-d649f97c8-qvv8r   1/1     Running   0          8d

The oc logs command can then be used to view the logs in the pod.

oc logs pod/cluster-logging-eventrouter-d649f97c8-qvv8r --namespace openshift-logging

This should return something like the following, which shows that the pod is logging events. Nice!

{"verb":"ADDED","event":{"metadata":{"name":"openshift-service-catalog-controller-manager-remover.1632d931e88fcd8f","namespace":"openshift-service-catalog-removed","selfLink":"/api/v1/namespaces/openshift-service-catalog-removed/events/openshift-service-catalog-controller-manager-remover.1632d931e88fcd8f","uid":"787d7b26-3d2f-4017-b0b0-420db4ae62c0","resourceVersion":"21399","creationTimestamp":"2020-09-08T15:40:26Z"},"involvedObject":{"kind":"Job","namespace":"openshift-service-catalog-removed","name":"openshift-service-catalog-controller-manager-remover","uid":"fac9f479-4ad5-4a57-8adc-cb25d3d9cf8f","apiVersion":"batch/v1","resourceVersion":"21280"},"reason":"Completed","message":"Job completed","source":{"component":"job-controller"},"firstTimestamp":"2020-09-08T15:40:26Z","lastTimestamp":"2020-09-08T15:40:26Z","count":1,"type":"Normal"}}
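Because the pod name has generated suffixes, it can be easier to pass the deployment to oc logs instead. oc then reads the logs of one of the deployment's pods. This small wrapper assumes bash and the deployment name eventrouter from deployment.yml, and eventrouter_logs is a hypothetical name.

```shell
# Hypothetical wrapper: read the logs of a pod in the eventrouter deployment
# without looking up the generated pod name first. Extra arguments are
# passed through to oc logs (e.g. --since=1h or --follow).
eventrouter_logs() {
  oc logs deployment/eventrouter --namespace openshift-logging "$@"
}
```

For example, eventrouter_logs --since=1h shows only the last hour of events, and eventrouter_logs --follow streams new events as they arrive. If the deployment is scaled beyond one replica, this reads from only one of the pods.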

An example scenario

Let's say there is a pod in the my-project namespace, as shown by the oc get pods command.

~]$ oc get pods --namespace my-project

NAME                         READY   STATUS    RESTARTS   AGE
pod/my-app-5ffbb5f99-b44dp   1/1     Running   0          7d18h

Let's delete the pod so that a new pod is spawned.

oc delete pod/my-app-5ffbb5f99-b44dp --namespace my-project

There should now be events in the my-project namespace. Keep in mind that events are only retained for a limited period of time, such as a few hours, as controlled by the kube-apiserver --event-ttl setting. Without Event Router, the events are purged once that period has passed. With Event Router, the events remain available in the Event Router pod logs.

~]$ oc get events --namespace my-project

LAST SEEN   TYPE     REASON             OBJECT                        MESSAGE
34s         Normal   Killing            pod/my-app-5ffbb5f99-b44dp    Stopping container my-container
33s         Normal   Scheduled          pod/my-app-5ffbb5f99-rfv8b    Successfully assigned my-project/my-app-5ffbb5f99-rfv8b to my-worker-jchnk
33s         Normal   AddedInterface     pod/my-app-5ffbb5f99-rfv8b    Add eth0 [10.11.12.13/23] from ovn-kubernetes
33s         Normal   Pulled             pod/my-app-5ffbb5f99-rfv8b    Container image "registry.digital.example.com/python:3.12.4-slim" already present on machine
33s         Normal   Created            pod/my-app-5ffbb5f99-rfv8b    Created container my-container
33s         Normal   Started            pod/my-app-5ffbb5f99-rfv8b    Started container my-container
34s         Normal   SuccessfulCreate   replicaset/my-app-5ffbb5f99   Created pod: my-app-5ffbb5f99-rfv8b
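While the events are still retained, oc get events can narrow the list to a single object and sort it chronologically. That makes side-by-side comparisons with the Event Router logs easier. This sketch assumes bash, and object_events is a hypothetical helper name.

```shell
# Hypothetical helper: list the events that refer to one object in a
# namespace, sorted by last timestamp (oldest first).
# Usage: object_events <namespace> <object-name>
object_events() {
  local namespace="$1" name="$2"
  oc get events --namespace "$namespace" \
    --field-selector involvedObject.name="$name" \
    --sort-by=.lastTimestamp
}
```

For example, object_events my-project my-app-5ffbb5f99-rfv8b should list only the events whose involved object is the new pod, leaving out the Killing event for the old pod and the SuccessfulCreate event for the replica set.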

For example, this command can be used to list the events in the logs of one of the Event Router pods that contain my-project.

~]$ pod=$(oc get pods --namespace openshift-logging | grep -v ^NAME | grep eventrouter | head -1 | awk '{print $1}'); oc logs $pod --namespace openshift-logging | grep -i my-project | jq

This should return something like the following, which is the same Killing event shown in the output of the "oc get events --namespace my-project" command.

{
  "verb": "ADDED",
  "event": {
    "metadata": {
      "name": "my-app-5ffbb5f99-b44dp.184e64967c3933e3",
      "namespace": "my-project",
      "uid": "3fc7678b-4af3-4058-a43e-a9aca9f9e498",
      "resourceVersion": "565808181",
      "creationTimestamp": "2025-07-02T09:18:22Z",
      "managedFields": [
        {
          "manager": "kubelet",
          "operation": "Update",
          "apiVersion": "v1",
          "time": "2025-07-02T09:18:22Z",
          "fieldsType": "FieldsV1",
          "fieldsV1": {
            "f:count": {},
            "f:firstTimestamp": {},
            "f:involvedObject": {},
            "f:lastTimestamp": {},
            "f:message": {},
            "f:reason": {},
            "f:reportingComponent": {},
            "f:reportingInstance": {},
            "f:source": {
              "f:component": {},
              "f:host": {}
            },
            "f:type": {}
          }
        }
      ]
    },
    "involvedObject": {
      "kind": "Pod",
      "namespace": "my-project",
      "name": "my-app-5ffbb5f99-b44dp",
      "uid": "2b1832ea-dd2c-4236-946b-4d0c4b465e13",
      "apiVersion": "v1",
      "resourceVersion": "558804670",
      "fieldPath": "spec.containers{my-container}"
    },
    "reason": "Killing",
    "message": "Stopping container my-container",
    "source": {
      "component": "kubelet",
      "host": "my-worker-jchnk"
    },
    "firstTimestamp": "2025-07-02T09:18:22Z",
    "lastTimestamp": "2025-07-02T09:18:22Z",
    "count": 1,
    "type": "Normal",
    "eventTime": null,
    "reportingComponent": "kubelet",
    "reportingInstance": "my-worker-jchnk"
  }
}
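The grep -i my-project filter in the command above matches any log line that mentions my-project anywhere, and the grep/head/awk chain depends on the column layout of oc get pods. Here is a slightly stricter sketch. It assumes bash, jq, and the component=eventrouter pod label from deployment.yml. It looks the pod up by label, then lets jq keep only the events whose involved object is in the requested namespace. The helper names are hypothetical, and any log lines that aren't JSON are skipped instead of making jq fail.

```shell
# Hypothetical helper: print the first eventrouter pod, e.g. pod/eventrouter-...
# (assumes the component=eventrouter label from deployment.yml).
first_eventrouter_pod() {
  oc get pods --namespace openshift-logging \
    --selector component=eventrouter --output name | head -n 1
}

# Hypothetical filter: read Event Router log lines on stdin and print
# (as compact JSON, one per line) only the events whose involved object
# is in the namespace given as $1. Non-JSON lines are silently dropped.
events_in_namespace() {
  jq -c -R --arg ns "$1" \
    'fromjson? | objects | select(.event.involvedObject.namespace == $ns)'
}
```

For example: pod=$(first_eventrouter_pod); oc logs "$pod" --namespace openshift-logging | events_in_namespace my-project | jq .event.message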

Or like this, to get just the event messages.

~]$ pod=$(oc get pods --namespace openshift-logging | grep -v ^NAME | grep eventrouter | head -1 | awk '{print $1}'); oc logs $pod --namespace openshift-logging | grep -i my-project | jq .event.message

"Stopping container my-container"

"Created pod: my-app-5ffbb5f99-rfv8b"

"Successfully assigned my-project/my-app-5ffbb5f99-rfv8b to my-worker-jchnk"

"Add eth0 [10.11.12.13/23] from ovn-kubernetes"

"Container image \"registry.example.com/python:3.12.4-slim\" already present on machine"

"Created container my-container"

"Started container my-container"
